Preamble:

The Illusion of Alignment

The fundamental error in our assumptions: we talk as though “AI development” is a single phenomenon with shared goals. In reality, at least ten distinct and often contradictory forces are pulling AI development in different directions simultaneously.

Understanding these competing drivers is essential because the AI we get will be shaped by whichever forces win, not by abstract debates about what AI “should” be.

I wrote a pragmatic alternative to this post: “A Pragmatic View: The Competing Drivers of AI Development, Lessons from the Dot-Com Era and Tech Bubbles”. Western media may be dismissive of China and the Global South, but consider the speculative follow-the-money play in energy, water, and compute as a service, especially as the Global South seems to have only a little say in the context of this post.

Introduction

AI is not a single project. Competing incentives across states, firms, and infrastructures are producing several incompatible futures at once. Compute and power limits now act as hard constraints. Policy is fragmenting markets. Value creation is uneven and often delayed. Open-source lowers barriers but does not remove dependency on chips, data, and energy. You should plan for divergence, not convergence.

Why a contrarian view is needed

· Incentives diverge. States seek power, firms seek margin, labs seek prestige, and buyers seek reliability. These aims often conflict.

· Hard constraints bind. Compute, power, and water now limit what can ship, when, and where.

· Rules fragment. Compliance differs by region, which changes model choice, routing, and logging.

· Value concentrates unevenly. Many pilots fail to deliver cash returns, even when demos look strong.

· Context matters. Global South needs and infrastructures differ, which changes viable designs and partners.

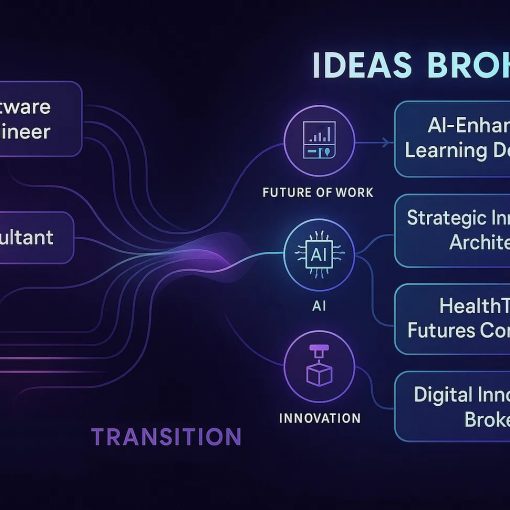

As AI workloads outpace model efficiency gains, development will pivot toward smaller, specialized architectures, retrieval-augmented generation, and aggressive batching to manage escalating power demands. Regulatory complexity will intensify for any product operating across borders, driving up compliance costs and slowing deployment cycles. In this environment, capital will concentrate around use cases with clear, measurable financial returns within a twelve-month horizon. Looking ahead, a fragmented inference landscape may emerge, where national and cloud-specific zones restrict model routing, creating a need for cross-zone brokers. These intermediaries would act as a new connective tissue between applications and models, navigating geopolitical and infrastructural boundaries in real time.
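The cross-zone broker idea can be made concrete with a small routing sketch. Everything here is hypothetical: the zone codes, the model catalog, the prices, and the `route` function are illustrative assumptions, not a description of any existing product or API. The broker simply picks the cheapest model hosted in a zone that the caller's residency policy permits, and refuses when no compliant route exists.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelEndpoint:
    name: str           # hypothetical model identifier
    zone: str           # jurisdiction/cloud zone where inference runs
    cost_per_1k: float  # illustrative price per 1,000 tokens


# Hypothetical policy table: which inference zones each request origin may use.
ALLOWED_ZONES = {
    "eu": {"eu"},
    "us": {"us", "eu"},
    "cn": {"cn"},
    "in": {"in", "eu", "us"},
}

# Hypothetical catalog of endpoints the broker knows about.
CATALOG = [
    ModelEndpoint("small-specialist", "eu", 0.2),
    ModelEndpoint("frontier-general", "us", 1.5),
    ModelEndpoint("domestic-general", "cn", 0.6),
    ModelEndpoint("local-open-weights", "in", 0.1),
]


def route(origin: str, catalog: list[ModelEndpoint] = CATALOG) -> ModelEndpoint:
    """Return the cheapest endpoint hosted in a zone the origin is allowed to use."""
    allowed = ALLOWED_ZONES.get(origin, set())
    candidates = [m for m in catalog if m.zone in allowed]
    if not candidates:
        # No compliant zone: the broker must refuse rather than route across a border.
        raise LookupError(f"no compliant route for origin {origin!r}")
    return min(candidates, key=lambda m: m.cost_per_1k)


if __name__ == "__main__":
    for origin in ("eu", "us", "cn", "in"):
        print(origin, "->", route(origin).name)
```

Here a US request may be served by the cheaper EU-hosted specialist because that zone is permitted, while a Chinese request can only reach the domestic endpoint. Keeping the policy as a plain lookup table makes it easy to audit; a real broker would also need logging, latency, and capability checks.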

PESTLE Analysis: The Macro Forces

P — POLITICAL Drivers

1. Geopolitical Competition (US-China-EU Tripolar Struggle)

Primary Driver: National security and technological supremacy

Key Characteristics:

- The U.S. favors rapid advancement led by private-sector innovation, while China rapidly expands state-backed, private, and hybrid AI initiatives, and Europe pursues a more cautious path with strong safety regulations

- By mid-2025, US authorities had banned even the specialized AI chips designed to comply with earlier export rules, effectively closing the last major loophole in access to top-tier AI hardware

- AI has emerged as a key driver of geopolitical power imbalances, fuelling competition for technological supremacy and economic dominance and intensifying global disparities

What This Produces:

- US: Speed-at-all-costs, private sector dominance, export controls as weapons

- China: State coordination, indigenous development, parallel technology stacks

- EU: Regulation-first, rights-based frameworks (AI Act), ethical positioning

Contradiction: Each claims to be building “safe” AI while racing to deploy faster than rivals can regulate.

2. Global South Resistance and Alternative Pathways

Primary Driver: Technological sovereignty and development autonomy

Key Characteristics:

- Open-source AI models are transforming adaptability and efficiency, promoting transparency and democracy, and empowering the Global South to address international development challenges

- Rejection of “AI colonialism”: the imposition of Western or Chinese AI systems without local contextualization

- India is advocating a middle ground and a “collective global effort” to ensure AI benefits for all

What This Produces:

- Emphasis on open-source models (escape from proprietary lock-in)

- Localized AI for agriculture, healthcare, education in non-Western contexts

- Resistance to data extraction by foreign AI companies

Contradiction: Global South needs AI capabilities but lacks compute infrastructure, creating dependency even as sovereignty is sought.

E — ECONOMIC Drivers

3. Corporate Profit Maximization Through Labor Displacement

Primary Driver: Cost reduction and margin expansion

Key Characteristics:

- Automation tools handling routine tasks cost roughly 10% of what a human agent costs while working around the clock, with mature automation programs reporting 20-35% higher output per employee

- 41% of companies worldwide expect to reduce their workforce by 2030 due to AI automation, though AI may also create 170 million new jobs globally by 2030

- AI remains a top priority for business leaders worldwide in 2025, with a strong focus on generating tangible results
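The first bullet combines two different effects, and a little arithmetic shows what each implies. This is a sketch under stated assumptions: cost per employee stays fixed when output rises, a human covers one 40-hour week, automation runs all 168 hours, and the $50,000 annual cost is a hypothetical figure. The function names are illustrative, not from any source.

```python
def unit_cost_change(output_gain: float) -> float:
    """Fractional change in labour cost per unit of output when output per
    employee rises by `output_gain` (0.20 = +20%) and cost per employee is fixed."""
    return 1.0 / (1.0 + output_gain) - 1.0


def cost_per_covered_hour(annual_cost: float, hours_per_week: float, weeks: int = 52) -> float:
    """Annual cost spread over the hours of coverage actually provided."""
    return annual_cost / (hours_per_week * weeks)


if __name__ == "__main__":
    # The 20-35% output range quoted above.
    for gain in (0.20, 0.35):
        print(f"+{gain:.0%} output -> {unit_cost_change(gain):+.1%} unit labour cost")

    HUMAN_ANNUAL_COST = 50_000.0                       # hypothetical figure
    AUTOMATION_ANNUAL_COST = 0.10 * HUMAN_ANNUAL_COST  # "roughly 10%" from the text
    human = cost_per_covered_hour(HUMAN_ANNUAL_COST, 40)       # one 40-hour shift
    bot = cost_per_covered_hour(AUTOMATION_ANNUAL_COST, 168)   # around the clock
    print(f"cost per covered hour: human {human:.2f}, automation {bot:.2f}, "
          f"ratio {human / bot:.0f}x")
```

Under these assumptions, 20-35% more output per employee means roughly 17-26% lower labour cost per unit, while the automation's cost per covered hour is about 42 times lower (10 × 168/40). The hypothetical salary cancels out of that ratio, which is why cost-focused buyers find the replacement case so compelling.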

What This Produces:

- AI optimized for task replacement rather than human augmentation

- Focus on measurable ROI in quarters, not years

- AI companies averaging only 25% gross margins, often trading short-term profit for distribution

Contradiction: 95% of generative AI pilots at companies are failing, yet investment continues because fear of being left behind outweighs evidence of actual returns.

4. Venture Capital and Hype Cycle Economics

Primary Driver: Exit multiples and market capture

Key Characteristics:

- Need for “10x returns” drives focus on winner-take-all markets

- Preference for general-purpose AI over specialized, context-specific solutions

- Valuation based on potential disruption, not current utility

What This Produces:

- Over-investment in consumer-facing generative AI (chatbots, image generation)

- Under-investment in “boring” but essential AI (supply chain, infrastructure, elder care)

- Pressure to scale prematurely before product-market fit

Contradiction: The VC model requires concentration of returns, but meaningful AI applications are often distributed and context-specific.

S — SOCIAL Drivers

5. Public Fear vs. Public Fascination

Primary Driver: Cultural narratives about AI (both utopian and dystopian)

Key Characteristics:

- Simultaneous fear of job loss and excitement about productivity gains

- Anthropomorphising of AI (treating chatbots as conscious beings)

- Demand for AI that “understands me” vs. fear of AI that “knows too much”

What This Produces:

- Product design that prioritizes “magic” user experience over transparency

- Privacy theatre (consent forms) without meaningful control

- Polarized regulation (either ban it or allow everything)

Contradiction: People want personalized AI but do not trust companies with their data. They want job security but also want AI conveniences that eliminate jobs.

6. Workforce Displacement vs. Workforce Augmentation

Primary Driver: Competing visions of human-AI relationship

Key Characteristics:

- Displacement camp: AI should do what humans currently do, but faster/cheaper

- Augmentation camp: AI should enhance human capabilities, not replace them

- Reality: Most companies pursue displacement while claiming augmentation

What This Produces:

- 39% of companies in the AI experimentation phase and 14% deploying at scale by 2025, with firms competing on speed of scaling

- Focus on “AI-native” businesses that minimize human labour from inception

- Growing inequality between AI-augmented knowledge workers and displaced service workers

Contradiction: Augmentation requires different technical architecture than replacement, but most R&D follows the replacement path.

T — TECHNOLOGICAL Drivers

7. Compute Constraints and Energy Limitations

Primary Driver: Physical limits of current AI paradigm

Key Characteristics:

- Training frontier models requires thousands of specialized chips

- Data centre energy consumption approaching national grid limitations

- Inference costs limiting deployment at scale

What This Produces:

- Race for energy-efficient architectures (neuromorphic computing, analog AI)

- Concentration of AI capability in companies/nations with massive compute

- Revival of interest in smaller, specialized models vs. general foundation models

Contradiction: We are building increasingly energy-intensive AI while facing climate imperatives.

8. Open Source vs. Proprietary Control

Primary Driver: Access to AI capabilities

Key Characteristics:

- Open source enables innovation without gatekeepers

- Proprietary models allow monetization and safety controls

- Open-source AI models promoting transparency and democracy, especially benefiting the Global South

What This Produces:

- Open camp: Democratized AI, rapid iteration, security through transparency

- Closed camp: Controlled deployment, revenue models, centralized safety governance

Contradiction: Both sides claim to be preventing AI harms while accusing the other of creating them.

L — LEGAL/REGULATORY Drivers

9. Regulatory Fragmentation

Primary Driver: Differing national approaches to AI governance

Key Characteristics:

- EU: Rights-based, precautionary (AI Act)

- US: Sector-specific, innovation-permissive (no omnibus AI law)

- China: State control, social stability focus (algorithmic recommendation regulations)

What This Produces:

- Compliance complexity for global AI deployment

- Forum shopping (companies developing where regulation is lightest)

- Inability to address truly global AI risks (no international coordination)

Contradiction: Everyone agrees AI needs governance; no one agrees on what that means.

E — ENVIRONMENTAL Drivers

10. Climate Impact vs. Climate Solutions

Primary Driver: AI’s dual role in environmental crisis

Key Characteristics:

- AI data centres consuming massive energy (training GPT-3 used about as much electricity as 120 US homes use in a year)

- AI as tool for climate modelling, energy optimization, carbon tracking

- Water consumption for cooling (millions of gallons per facility)

What This Produces:

- Tension between AI acceleration and sustainability commitments

- Green AI movement (efficiency-first design)

- Climate cost externalized to global commons

Contradiction: We are using energy-intensive AI to solve energy problems.

PPT Analysis: People, Process, Technology

PEOPLE Who’s Actually Driving Development

The Power Players (Not What You Think)

- Not “AI researchers”: they propose; they do not control deployment

- Not “regulators”: they react to what’s already built

- Not “users”: they adapt to what’s offered

The actual drivers:

- Cloud infrastructure providers (AWS, Azure, GCP): Control compute access

- Chip manufacturers (NVIDIA, TSMC): Determine who can build frontier models

- Foundation model labs (OpenAI, Anthropic, Google, Meta): Set technical paradigms

- National security apparatuses: Decide what gets built and where

- VC mega-funds: Determine what gets funded at scale

Often-Ignored Non-Technical Drivers:

- Procurement officers in large enterprises (define what AI gets deployed)

- Middle managers (determine whether AI is used for augmentation or surveillance)

- Data labellers and annotators (shape model behaviour through examples, often from Global South)

PROCESS — How Decisions Get Made

The Broken Feedback Loop

Ideal Process:

1. Identify need → 2. Research solution → 3. Test safety → 4. Deploy → 5. Monitor impact → 6. Adjust

Actual Process:

1. Build impressive demo → 2. Announce capability → 3. Race to deploy → 4. Discover problems → 5. Patch with content filters → 6. Repeat

Process Failures:

- No standardized AI risk assessment before deployment

- Impact evaluations happen post-deployment, if at all

- Regulatory capture: Companies write their own governance standards

- 95% pilot failure rate suggests fundamentally broken product development process

TECHNOLOGY — The Science vs. Engineering Split

Science (What’s Possible)

- Transformer architecture breakthroughs

- Scaling laws (bigger = better, until it isn’t)

- Multimodal learning

- Emergent capabilities from scale

Engineering (What Gets Built)

- APIs that maximize stickiness, not user control

- Centralized inference (cloud-dependent)

- Black-box models (even when explainability is technically possible)

- Surveillance-optimized architecture (data collection baked in)

The Gap:

- Science says: We could build interpretable, efficient, specialized AI

- Engineering builds: Opaque, general-purpose, compute-intensive systems

Why?

- Interpretable AI harder to monetize (can’t claim “magic”)

- Efficient AI reduces cloud revenue

- Specialized AI does not create network effects

- Surveillance architecture enables behavioural targeting (ad revenue)

Non-Technology Drivers (The Elephant in the Room)

Cultural and Ideological Factors

- Silicon Valley Messianism
  - AI as path to post-scarcity utopia
  - “Move fast and break things” applied to societal infrastructure
  - Technological solutionism (every problem has an AI solution)

- Chinese Techno-Nationalism
  - AI as instrument of state power and social stability
  - Technology in service of national rejuvenation
  - Integration of AI into social credit systems

- European Digital Humanism
  - AI as tool that must respect fundamental rights
  - Precautionary principle (prove safety before deployment)
  - Human agency as non-negotiable

- Global South Pragmatism
  - AI as development accelerator, not luxury
  - Focus on immediate needs (agriculture, basic healthcare, education)
  - Scepticism toward both Western and Chinese AI paradigms

The Real Question: Which Drivers Are Winning?

Current Dominance Ranking (by actual impact on what gets built)

Tier 1: Dominant Forces

1. Corporate profit maximization (shapes 70% of investment decisions)

2. US-China geopolitical competition (determines regulatory environment and chip access)

3. Compute/energy constraints (physical limits on what is possible)

Tier 2: Significant Influence

4. VC funding patterns (determines which applications get resources)

5. Regulatory fragmentation (creates compliance costs, slows deployment)

6. Open vs. proprietary debates (splits developer community)

Tier 3: Aspirational (Talked About, Rarely Decisive)

7. Environmental concerns (widely discussed, minimally enforced)

8. Workforce augmentation vs. displacement (companies claim former, build latter)

9. Global South sovereignty (growing but still limited market power)

10. Public fear/fascination (influences PR, rarely changes product roadmaps)

Implications for Digital Frameworks

What this means for some current development arenas of AI: Memory (usability, replication stability), Personality (within agentic AI and social media), and Reasoning (within robotics and service automation, which overlaps with RPA).

If corporate profit maximization wins:

- Memory: Optimized for monetizable patterns (surveillance capitalism)

- Reasoning: Black-box optimization for engagement, not understanding

- Personality: Designed for parasocial attachment (maximize usage time)

If geopolitical competition wins:

- Memory: Nation-state controlled, split into incompatible systems

- Reasoning: Optimized for strategic advantage, not universal utility

- Personality: Reflects national values, cannot bridge cultural divides

If Global South pragmatism wins:

- Memory: Designed for low-resource contexts, locally relevant

- Reasoning: Optimized for immediate development needs

- Personality: Culturally contextualized, not one-size-fits-all

If environmental constraints win:

- Memory: Efficient, compressed, selective (can’t store everything)

- Reasoning: Fast inference, low-power chips, specialized models

- Personality: Lightweight, functional, not anthropomorphized

The Uncomfortable Truth

We are not building one AI future. We are building multiple incompatible AI futures simultaneously.

The question is not “what should AI be?” but rather:

- Which of these driving forces will dominate?

- Can any coalition of forces overcome corporate profit maximization?

- Are we already locked into technological trajectories that preclude alternatives?

- Do users, workers, and citizens have any meaningful agency in this competition?

A desired framework for Memory-Reasoning-Personality is valuable precisely because it offers a normative alternative to the dominant drivers. But that means it needs to explicitly address:

- How does it survive in a profit-maximization environment?

- How does it navigate geopolitical fragmentation?

- How does it scale within energy/compute constraints?

- How does it remain relevant to Global South contexts?

Identified Stakeholders & Their Core Needs

This analysis reveals that traditional stakeholder maps are inadequate. The “official” stakeholders (researchers, regulators, users) have less power than the “actual” drivers. Here is a layered stakeholder analysis:

Tier 1: The Decisive Actors (The “Actual Drivers”)

- Cloud/Compute Providers (AWS, Azure, GCP, NVIDIA, TSMC):
  - Need: Maintain demand for compute, secure supply chains, and shape AI development to be compute-intensive to protect their business model.

- Foundation Model Labs (OpenAI, Anthropic, Google, Meta):
  - Need: Achieve technological supremacy, capture market share, establish dominant paradigms, and manage regulatory risk without stifling innovation.

- Venture Capital Mega-Funds:
  - Need: Generate 10x returns by betting on winner-take-all, disruptive AI applications, often at the expense of niche or socially beneficial but less profitable ones.

- National Security Apparatuses (US, China, etc.):
  - Need: Ensure AI superiority for national security, control critical technology (chips), and prevent adversaries from gaining an edge.

Tier 2: The Implementors & Adaptors

- Corporate Executives & Procurement Officers:
  - Need: Maximize profit and ROI through cost reduction (labour displacement) and efficiency gains, while mitigating implementation risk and compliance costs.

- Policymakers & Regulators (EU, US, China, etc.):
  - Need: Balance innovation with safety, rights, and stability. Their need is to assert control and governance in a field that is evolving faster than their regulatory processes.

- Middle Managers:
  - Need: Practical tools to meet performance targets; they determine whether AI is used for team augmentation or employee surveillance.

Tier 3: The Users & Recipients

- The General Public / Users:
  - Need: Safety, job security, privacy, and tangible benefits from AI, while grappling with fascination and fear. They need agency and transparency, which are rarely provided.

- Workforces (Knowledge & Service):
  - Need: Clarity on their future role (augmentation vs. displacement). They need reskilling opportunities and ethical guidelines for human-AI collaboration.

- Global South Nations & Communities:
  - Need: Technological sovereignty, affordable and context-specific AI solutions for development (agriculture, healthcare), and resistance to data colonialism.

A possible Navigation Map / Playbook for Stakeholders

This playbook offers possible strategic guidance to help each stakeholder group navigate competing forces that are constantly changing.

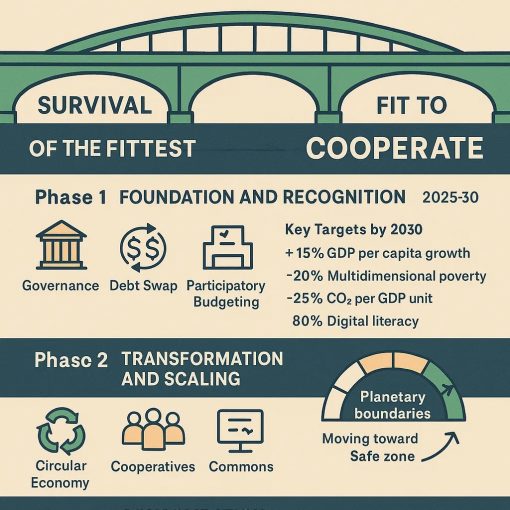

For Policymakers & Regulators:

- Move from Reaction to Shaping: Use procurement power and public investment to steer AI development towards public-good goals (e.g., precision public health, green tech) rather than just regulating private sector outputs.

- Foster “Sandboxes for Sovereignty”: Create regulatory sandboxes that allow the Global South and smaller nations to experiment with localized, open-source AI models without being crushed by compliance burdens designed for tech giants.

- Address the Compute Chokepoint: Develop industrial policies for sovereign compute capacity and invest in R&D for energy-efficient AI architectures to break the dependency cycle.

For Corporate Leaders & Procurement Officers:

- Audit for Augmentation: Systematically evaluate every AI tool for its potential to augment human workers (increasing capability and value) versus simply displacing them (cutting costs). The long-term health of the business and its workforce depends on it.

- Resist Hype-Driven Procurement: Base investment decisions on pilot results and clear metrics tied to strategic goals, not on fear of missing out. The 95% pilot failure rate is a warning to be more discerning.

- Demand Explainability: As major customers, use your purchasing power to demand less black-box AI from vendors, especially for high-stakes decisions in HR, finance, and operations.
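A pilot gate based on measured results, rather than demo quality, can be very simple. The sketch below assumes a plain payback-period rule and borrows the twelve-month horizon mentioned in the introduction; `PilotResult`, `payback_months`, and `go_no_go` are hypothetical names, and the example figures are invented for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class PilotResult:
    name: str
    upfront_cost: float      # one-off build and integration cost
    monthly_benefit: float   # measured savings or revenue per month
    monthly_run_cost: float  # inference, licences, human oversight per month


def payback_months(p: PilotResult) -> float:
    """Months until net monthly benefit repays the upfront cost (inf if never)."""
    net = p.monthly_benefit - p.monthly_run_cost
    if net <= 0:
        return math.inf
    return p.upfront_cost / net


def go_no_go(p: PilotResult, horizon_months: int = 12) -> bool:
    """Approve scaling only if the pilot pays back within the horizon."""
    return payback_months(p) <= horizon_months


if __name__ == "__main__":
    pilots = [
        PilotResult("invoice triage", 60_000, 9_000, 3_000),
        PilotResult("customer chat assistant", 120_000, 8_000, 7_000),
    ]
    for p in pilots:
        verdict = "go" if go_no_go(p) else "no-go"
        print(f"{p.name}: payback {payback_months(p):.0f} months -> {verdict}")
```

The second pilot may demo well, but once run costs are counted its payback stretches to 120 months. Judging on net rather than gross benefit is the point of the gate.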

For Technologists & Researchers:

- Champion “Boring AI”: Advocate for and build specialized, efficient, and interpretable models that solve critical but unsexy problems in infrastructure, logistics, and caregiving.

- Design for the Global South: Prioritize low-resource, offline-capable, and culturally contextualized AI architectures. This is a massive, underserved market and a moral imperative.

- Bridge the Science-Engineering Gap: Publicly demonstrate and advocate for the deployment of interpretable and efficient AI, proving that it is technically possible and socially preferable.

For the Public & Civil Society:

- Demand Digital AI Rights, Not Just Convenience: Support organizations and regulations that prioritize data privacy, algorithmic fairness, and worker protections. Make your concerns heard through consumer choices and civic engagement.

- Educate for an Augmented Future: Push for educational reforms that focus on uniquely human skills—critical thinking, creativity, ethics, and collaboration—that complement AI rather than compete with it.

- Support Alternative Models: Champion the development and use of open-source, cooperative, and public-interest AI initiatives that offer alternatives to the profit-maximization paradigm.

Conclusion

The uncomfortable truth laid bare by this analysis is that the future of AI is not being written by a unified hand with a common purpose. It is being violently drafted in a tug-of-war between competing forces, where the dominant drivers—corporate profit maximization, geopolitical rivalry, and the physical limits of compute—are actively pulling us toward a future of fragmented, inefficient, and potentially disempowering AI systems.

The frameworks we discuss for “Memory,” “Reasoning,” and “Personality” are not merely technical specs; they are battlegrounds. Will our AI remember in a way that enriches human experience or merely to exploit behavioural patterns? Will it reason in a way we can understand and trust, or as an opaque optimizer for engagement? Will its personality foster healthy collaboration or addictive parasocial bonds?

The central question is no longer “What is AI?” but “Whose AI is it for?” The current trajectory, steered by the most powerful drivers, suggests an answer that benefits a narrow set of interests at a potentially great social and environmental cost.

However, this future is not inevitable. The map of divergent forces is also a guide for intervention. By understanding the real drivers and their contradictions, every stakeholder—from the policymaker and the CEO to the technologist and the citizen—can find leverage points. We can choose to invest in, build, and demand a different path: one where AI serves as a Universal Helper, calibrated for resilience, cultural continuity, and human flourishing. The competition for the soul of AI has begun, and it is a competition we cannot afford to spectate.

Appendices

Summaries

PESTLE Analysis: Macro Forces Shaping AI

| Factor | Key Drivers | Implications |
|---|---|---|
| Political | Geopolitical competition (US-China-EU), national security, AI sovereignty | Fragmented standards, export controls, state-backed innovation |
| Economic | Labor displacement, VC funding cycles, cost-efficiency | Short-term ROI focus, hype-driven investment, automation over augmentation |
| Social | Public fear vs. fascination, cultural narratives, workforce disruption | Polarized adoption, anthropomorphized design, inequality in job impacts |
| Technological | Compute constraints, open vs. closed models, energy demands | Shift to efficient architectures, concentration of capabilities, model bifurcation |
| Legal | Regulatory fragmentation, data governance, AI safety laws | Compliance complexity, forum shopping, reactive governance |
| Environmental | Climate impact of training/inference, AI for sustainability | Tension between acceleration and ecological responsibility |

People–Process–Technology (PPT) Analysis

People: Who Actually Drives AI Development

- Cloud Providers (AWS, Azure, GCP) – Gatekeepers of compute access

- Chip Manufacturers (NVIDIA, TSMC) – Define hardware limits and availability

- Foundation Model Labs (OpenAI, Anthropic, Meta) – Set technical paradigms

- VCs & National Security Agencies – Fund and steer strategic direction

- Enterprise Procurement & Middle Managers – Decide real-world deployment

- Data Annotators (often Global South) – Shape model behaviour through examples

Ignored but critical: Users, regulators, and researchers often react rather than drive.

Process: How Decisions Are Actually Made

| Ideal Process | Actual Process |
|---|---|
| Identify need → Research → Test → Deploy → Monitor → Adjust | Build demo → Announce → Deploy → Discover issues → Patch → Repeat |

- Failures: No standardized risk assessment, post-hoc impact evaluation, regulatory capture

- Outcome: 95% pilot failure rate, broken feedback loops, premature scaling

Technology: What’s Possible vs. What Gets Built

| Science (Possible) | Engineering (Built) |
|---|---|
| Interpretable, efficient, specialized AI | Black-box, general-purpose, cloud-dependent systems |
| Emergent capabilities, multimodal learning | Surveillance-optimized, monetizable APIs |

- Why the gap? Monetization favors opacity, centralization, and engagement-maximizing design