Preamble

The AI First Look Framework is an approach to AI project assessment. It provides a rapid evaluation, typically 3-5 days and up to 4 weeks depending on project size and complexity, to determine whether an AI project should proceed to full discovery. The framework addresses the unique challenges of AI projects, including ethical considerations, data readiness, and technical feasibility, while maintaining the collaborative, user-centered approach of the original methodology.

This post is related to: Performing AI Discovery in the Age of Enterprise Transformation

Idea trigger: Consider creating an interactive AI agent or custom GPT to perform a first look (I will create a custom GPT in the future).

Note: There is a section below on the requirements for an AI project submission gateway.

1. Framework Overview

1.1 Purpose and Objectives

The AI First Look serves as a rapid assessment that enables teams to:

- Quickly assess AI project viability without substantial resource investment

- Identify AI-specific risks and opportunities early in the process

- Determine appropriate next steps (Full AI Discovery, non-AI solution, business transformation, hybrid approach, or project termination)

- Ensure stakeholder alignment on AI project scope and expectations

- Prevent AI solution bias by maintaining a solution-agnostic approach

1.2 Key Adaptations for AI Projects

Unlike traditional digital projects, AI First Looks must address:

- AI vs Non-AI Classification: Determining if AI is actually needed

- Data Readiness Assessment: Evaluating data availability and quality

- Ethical Screening: Early identification of ethical risks and concerns

- Technical Feasibility: AI-specific infrastructure and capability requirements

- Regulatory Compliance: AI-specific legal and regulatory considerations

2. The AI First Look Process

2.1 Duration and Resources

- Timeline: 3-5 days (up to 4 weeks for large or complex projects)

- Team Size: 2-3 people (AI-enabled team)

- Output: AI Project Profile with clear next-step recommendations

2.2 Core Team Composition

Essential Roles:

- AI Discovery Lead (experienced in AI projects and user research)

- AI Technical Advisor (data scientist or AI engineer)

- Ethics Advisor (part-time involvement for screening)

Extended Support:

- Business Analyst (for complex business cases)

- Legal/Compliance Advisor (for high-risk projects)

3. AI First Look Methodology

3.1 The 10 Pillars of AI Discovery Research

The original 8 pillars, adapted and extended to 10 for AI-specific needs:

- Environment – AI literacy and organizational readiness

- Scope – Problem definition and solution boundaries

- Recruitment and Admin – Stakeholder engagement and project management

- Data and Knowledge Management – Data availability, quality, and governance

- People – Team capabilities and change management

- Organizational Context – AI strategy alignment and support

- Governance – AI ethics, compliance, and risk management

- Tools and Infrastructure – AI platforms, computing resources, and integration

- AI Classification – Determining if AI is the appropriate solution

- Ethical Impact – Early ethical screening and risk assessment

3.2 Semi-Structured AI Interview Framework

Preparation Phase:

- Review submission through AI lens

- Prepare AI-specific questions

- Set up collaborative digital whiteboard

- Share AI discovery principles and methodology

Interview Structure (105-120 minutes):

Opening (15 minutes)

- Introductions and role clarification

- Recording consent and data handling

- Overview of AI discovery process

- Explanation of solution-agnostic approach

Core Assessment Areas (75-90 minutes)

1. Vision and Intent (10-15 minutes)

- What do you hope AI will achieve for your organization/users?

- Why do you believe AI is needed for this problem?

- What would success look like?

- Do you have predetermined AI solutions in mind?

2. Problem Definition (15-20 minutes)

- Who experiences this problem and how?

- What is the current impact of this problem?

- What happens if this problem remains unsolved?

- How do people currently work around this problem?

- What have you tried before?

3. AI Suitability Assessment (10-15 minutes)

- Does this problem involve pattern recognition or prediction?

- Is there sufficient data to train AI models?

- Would a rule-based system be sufficient?

- Are there humans currently performing this task successfully?

- Does the problem require human judgment or creativity?

4. Data Landscape (15-20 minutes)

- What data do you have access to?

- How is this data currently stored and managed?

- Who owns and controls this data?

- How complete and how high in quality are your datasets?

- Are there any data sharing restrictions or privacy concerns?

5. Stakeholder and Impact Mapping (10-15 minutes)

- Who would be affected by an AI solution?

- Are there vulnerable or marginalized groups involved?

- What are the potential positive and negative impacts?

- Who are the key decision-makers and influencers?

- How would users interact with an AI system?

6. Technical and Organizational Readiness (10-15 minutes)

- What technical infrastructure currently exists?

- What AI skills and expertise do you have in-house?

- How does this align with your digital/AI strategy?

- What budget and timeline constraints exist?

- Who would maintain and operate an AI system?

Closing (15 minutes)

- Summary of key points

- Next steps explanation

- Questions and clarifications

- Feedback on the process
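
As a quick sanity check on the agenda above, the per-area timings can be added up. The sketch below is illustrative only: the names `CORE_AREAS`, `OPENING`, `CLOSING`, and `total_range` are hypothetical and not part of the framework; the minute values are copied from the interview structure.

```python
# Illustrative only: (min, max) minutes for each core assessment area,
# copied from the interview structure above.
CORE_AREAS = {
    "Vision and Intent": (10, 15),
    "Problem Definition": (15, 20),
    "AI Suitability Assessment": (10, 15),
    "Data Landscape": (15, 20),
    "Stakeholder and Impact Mapping": (10, 15),
    "Technical and Organizational Readiness": (10, 15),
}
OPENING = 15  # minutes
CLOSING = 15  # minutes


def total_range(areas):
    """Return the (min, max) total minutes across all areas."""
    low = sum(lo for lo, _ in areas.values())
    high = sum(hi for _, hi in areas.values())
    return low, high


core_low, core_high = total_range(CORE_AREAS)
print(f"Core areas: {core_low}-{core_high} minutes")
print(f"With opening and closing: "
      f"{OPENING + core_low + CLOSING}-{OPENING + core_high + CLOSING} minutes")
```

The per-area ranges add up to 70-100 minutes, so the 75-90 minute core target only holds if some areas finish near their lower bound. It helps to decide before the interview which areas deserve the extra time.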

4. AI-Specific Assessment Criteria

4.1 AI Classification Decision Tree

Primary Questions:

- Does the problem require learning from data patterns?

- Is the problem too complex for rule-based solutions?

- Is there sufficient quality data available?

- Are there clear success metrics for AI performance?

Classification Outcomes:

- Strong AI Candidate: Proceed to full AI discovery

- Weak AI Candidate: Explore hybrid or non-AI solutions

- Non-AI Solution: Redirect to traditional digital discovery

- Insufficient Information: Require additional data/research
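
The framework lists the questions and the outcomes but does not prescribe exactly how answers map to outcomes. The sketch below shows one possible mapping, as an assumption rather than the framework's rule: any unanswered question yields Insufficient Information, a problem that neither needs learning nor exceeds rule-based solutions is Non-AI, four "yes" answers make a Strong AI Candidate, and everything else is a Weak AI Candidate. The names `Classification` and `classify` are hypothetical.

```python
from enum import Enum
from typing import Optional


class Classification(Enum):
    STRONG_AI = "Strong AI Candidate"
    WEAK_AI = "Weak AI Candidate"
    NON_AI = "Non-AI Solution"
    INSUFFICIENT_INFO = "Insufficient Information"


def classify(needs_learning: Optional[bool],
             too_complex_for_rules: Optional[bool],
             has_quality_data: Optional[bool],
             has_clear_metrics: Optional[bool]) -> Classification:
    """Map the four primary questions to an outcome.

    None means "not yet known". The branching is an assumed reading
    of the decision tree, not a rule stated by the framework.
    """
    answers = (needs_learning, too_complex_for_rules,
               has_quality_data, has_clear_metrics)
    if any(a is None for a in answers):
        return Classification.INSUFFICIENT_INFO
    if not needs_learning and not too_complex_for_rules:
        return Classification.NON_AI
    if all(answers):
        return Classification.STRONG_AI
    return Classification.WEAK_AI


# Example: learning is needed, but data quality is not yet good enough.
print(classify(True, True, False, True).value)
```

Teams may well want different thresholds, for example treating missing success metrics as Insufficient Information rather than a weak case.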

4.2 Ethical Red Flags Assessment

Immediate Concerns:

- High-risk decision making (employment, healthcare, criminal justice)

- Vulnerable population impact

- Potential for bias or discrimination

- Privacy and surveillance implications

- Lack of transparency, or no defined transparency requirements

Escalation Triggers:

- Any identified red flag requires ethics committee involvement

- High-risk projects need full ethical impact assessment

- Regulatory compliance concerns need legal review
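
The three escalation triggers can be read as independent checks that each add a required follow-up. A minimal sketch, assuming a hypothetical `EthicsScreen` record filled in during the First Look (the field and function names are illustrative, not part of the framework):

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class EthicsScreen:
    """Hypothetical summary of the ethical screening for one project."""
    red_flags: List[str] = field(default_factory=list)  # immediate concerns found
    high_risk: bool = False            # project judged high-risk overall
    regulatory_concern: bool = False   # a compliance question was raised


def escalations(screen: EthicsScreen) -> List[str]:
    """Return the follow-up actions triggered by the screening result."""
    actions = []
    if screen.red_flags:
        actions.append("ethics committee involvement")
    if screen.high_risk:
        actions.append("full ethical impact assessment")
    if screen.regulatory_concern:
        actions.append("legal review")
    return actions


screen = EthicsScreen(red_flags=["Potential for bias or discrimination"],
                      high_risk=True)
print(escalations(screen))
```

Keeping the checks independent means a project can trigger all three escalations at once, which matches the way the triggers are listed.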

4.3 Data Readiness Evaluation

Critical Factors:

- Volume: Sufficient data for training and validation

- Quality: Accurate, complete, and relevant data

- Accessibility: Legal and technical access to data

- Governance: Clear data ownership and usage rights

- Bias: Potential for skewed or unrepresentative data

Readiness Levels:

- Ready: High-quality, accessible data with clear governance

- Developing: Some data available but gaps or quality issues

- Not Ready: Insufficient or inaccessible data for AI development
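
One way to turn the five factors into a readiness level is to rate each factor and then apply a simple rule. The sketch below assumes a three-point rating scale ("ok", "gap", "blocker") that is not part of the framework, and assumes that only a blocker on volume or accessibility means Not Ready, following the wording "insufficient or inaccessible data". The names `FACTORS` and `readiness_level` are hypothetical.

```python
FACTORS = ("volume", "quality", "accessibility", "governance", "bias")


def readiness_level(ratings: dict) -> str:
    """Map per-factor ratings to Ready, Developing, or Not Ready.

    ratings maps each factor name to 'ok', 'gap', or 'blocker'
    (an assumed scale, used here for illustration only).
    """
    missing = [f for f in FACTORS if f not in ratings]
    if missing:
        raise ValueError(f"unrated factors: {missing}")
    # Insufficient or inaccessible data rules out AI development for now.
    if ratings["volume"] == "blocker" or ratings["accessibility"] == "blocker":
        return "Not Ready"
    if all(ratings[f] == "ok" for f in FACTORS):
        return "Ready"
    return "Developing"


example = {"volume": "ok", "quality": "gap", "accessibility": "ok",
           "governance": "ok", "bias": "gap"}
print(readiness_level(example))
```

Requiring a rating for every factor is deliberate: an unrated factor is treated as an error rather than silently counted as acceptable.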

5. AI First Look Outputs

5.1 AI Project Profile

Executive Summary:

- Problem statement and business case

- AI suitability assessment and recommendation

- Key risks and mitigation strategies

- Resource requirements and timeline estimates

Detailed Sections:

- Problem Analysis: User needs, pain points, and impact assessment

- AI Classification: Technical feasibility and approach recommendations

- Data Assessment: Data availability, quality, and governance status

- Ethical Screening: Identified risks and recommended safeguards

- Stakeholder Map: Key players, supporters, and potential blockers

- Technical Requirements: Infrastructure, skills, and integration needs

- Next Steps: Specific recommendations with rationale

5.2 Decision Recommendations

Option 1: Proceed to Full AI Discovery

- Criteria: Strong AI case, data readiness, manageable risks

- Next steps: Full 8-12 week AI discovery process

- Resource allocation: Dedicated AI discovery team

Option 2: Hybrid/Phased Approach

- Criteria: Moderate AI case, some data/technical gaps

- Next steps: Address gaps while exploring AI possibilities

- Resource allocation: Mixed team with AI consultation

Option 3: Non-AI Digital Solution

- Criteria: Problem doesn’t require AI, simpler solutions available

- Next steps: Traditional digital discovery or direct development

- Resource allocation: Standard digital team

Option 4: Not Ready/No-Go

- Criteria: Insufficient data, high risks, unclear problem

- Next steps: Problem refinement or project termination

- Resource allocation: Minimal – feedback and guidance only
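
The four options can be sketched as a small decision function. The criteria come from the options above, but the order in which they are checked is an assumption: an unclear problem stops everything, a problem that does not need AI goes to Option 3 regardless of data, and high risk or Not Ready data then leads to Option 4. The function name `recommend` and the string values for `ai_case` and `risks` are hypothetical.

```python
def recommend(ai_case: str, data_readiness: str, risks: str,
              problem_clear: bool = True) -> str:
    """Pick one of the four decision options.

    ai_case:        'strong', 'moderate', or 'none' (assumed labels)
    data_readiness: 'Ready', 'Developing', or 'Not Ready'
    risks:          'manageable' or 'high' (assumed labels)
    """
    if not problem_clear:
        return "Option 4: Not Ready/No-Go"
    if ai_case == "none":
        return "Option 3: Non-AI Digital Solution"
    if risks == "high" or data_readiness == "Not Ready":
        return "Option 4: Not Ready/No-Go"
    if ai_case == "strong" and data_readiness == "Ready":
        return "Option 1: Proceed to Full AI Discovery"
    # Moderate case, or a strong case with data or technical gaps.
    return "Option 2: Hybrid/Phased Approach"


print(recommend("moderate", "Developing", "manageable"))
```

In practice the recommendation remains a judgment made by the First Look team; a function like this is mainly useful for making that judgment consistent and auditable across projects.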

6. AI First Look Best Practices

6.1 Interview Principles

AI-Specific Adaptations:

- Maintain AI Solution Agnosticism: Avoid leading toward AI solutions

- Focus on Problem, Not Technology: Understand the core issue first

- Assess Data Realism: Be honest about data requirements and availability

- Consider Ethical Implications Early: Screen for potential harm or bias

- Evaluate Human-AI Interaction: Understand how AI would fit into workflows

- Question AI Necessity: Challenge assumptions about needing AI

6.2 Common AI Discovery Pitfalls to Avoid

Solution Bias:

- Assuming AI is always the best solution

- Ignoring simpler, more effective alternatives

- Following AI trends rather than user needs

Data Overconfidence:

- Underestimating data quality issues

- Ignoring data accessibility barriers

- Overlooking bias in historical data

Ethical Blindness:

- Missing potential harm to vulnerable groups

- Underestimating fairness and transparency needs

- Ignoring regulatory compliance requirements

6.3 Stakeholder Management

Setting Expectations:

- Explain AI discovery process and timeline

- Clarify that AI may not be the recommended solution

- Outline commitment required from stakeholders

- Establish communication channels and feedback loops

Managing AI Hype:

- Address unrealistic expectations about AI capabilities

- Explain limitations and risks of AI solutions

- Focus on user value rather than technological novelty

- Provide realistic timelines and resource requirements

7. Quality Assurance and Continuous Improvement

7.1 AI First Look Review Process

Internal Team Review:

- Technical feasibility assessment validation

- Ethical screening completeness check

- Data readiness evaluation verification

- Stakeholder engagement effectiveness review

External Validation:

- Ethics committee review for high-risk projects

- Technical architecture board input for complex cases

- Legal/compliance review for regulated domains

- User representative feedback where appropriate

7.2 Success Metrics

Process Metrics:

- Time from submission to First Look completion

- Stakeholder satisfaction with the process

- Quality of recommendations (post-project validation)

- Resource efficiency compared to full discovery

Outcome Metrics:

- Percentage of AI First Looks leading to successful AI projects

- Early identification of non-AI solutions

- Reduction in failed AI projects

- Improvement in AI project ethical compliance
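
Two of these metrics are easy to compute once each First Look is logged. The sketch below assumes a hypothetical `FirstLookRecord` structure and made-up sample dates. It also assumes the success percentage is measured only over First Looks that recommended AI and whose project has since finished; the framework does not define the denominator.

```python
from dataclasses import dataclass
from datetime import date
from statistics import median
from typing import List, Optional


@dataclass
class FirstLookRecord:
    """Hypothetical log entry for one completed First Look."""
    submitted: date
    completed: date
    recommendation: str                       # e.g. "ai", "non_ai", "hybrid", "no_go"
    project_succeeded: Optional[bool] = None  # None until the project has finished


def median_days_to_complete(records: List[FirstLookRecord]) -> float:
    """Median calendar days from submission to First Look completion."""
    return median((r.completed - r.submitted).days for r in records)


def ai_success_rate(records: List[FirstLookRecord]) -> Optional[float]:
    """Share of AI-recommended First Looks whose project later succeeded."""
    finished = [r for r in records
                if r.recommendation == "ai" and r.project_succeeded is not None]
    if not finished:
        return None  # nothing to measure yet
    return sum(r.project_succeeded for r in finished) / len(finished)


# Made-up sample data for illustration.
records = [
    FirstLookRecord(date(2024, 1, 8), date(2024, 1, 12), "ai", True),
    FirstLookRecord(date(2024, 2, 5), date(2024, 2, 8), "non_ai"),
    FirstLookRecord(date(2024, 3, 4), date(2024, 3, 11), "ai", False),
]
print(median_days_to_complete(records), ai_success_rate(records))
```

Returning `None` when no AI project has finished avoids reporting a misleading 0% during the early months of the service.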

7.3 Continuous Improvement Framework

Regular Reviews:

- Monthly team retrospectives on First Look effectiveness

- Quarterly analysis of recommendation accuracy

- Annual review of methodology and tools

- Ongoing feedback integration from stakeholders

Methodology Updates:

- Integration of new AI governance standards

- Adaptation to emerging AI technologies

- Incorporation of regulatory changes

- Enhancement based on lessons learned

8. Tools and Templates

8.1 AI First Look Toolkit

Assessment Tools:

- AI Classification Decision Tree

- Data Readiness Evaluation Matrix

- Ethical Risk Screening Checklist

- Stakeholder Impact Assessment Grid

- Technical Feasibility Evaluation Framework

Documentation Templates:

- AI Project Profile Template

- Semi-Structured Interview Guide

- Stakeholder Communication Plan

- Risk Assessment and Mitigation Plan

- Recommendation Decision Matrix

8.2 Digital Collaboration Tools

Recommended Platforms:

- Collaborative whiteboarding (Miro, Mural)

- Document sharing and version control

- Video conferencing with recording capability

- Project management and tracking tools

- Secure data sharing and storage solutions

9. Implementation Guidance

9.1 Getting Started

Phase 1: Team Preparation (1-2 weeks)

- Train team on AI First Look methodology

- Set up tools and templates

- Establish governance and approval processes

- Create communication channels and workflows

Phase 2: Pilot Projects (4-6 weeks)

- Run 3-5 AI First Looks as pilots

- Gather feedback and refine processes

- Validate tools and templates

- Establish quality assurance procedures

Phase 3: Full Implementation (Ongoing)

- Launch AI First Look service

- Monitor and improve processes

- Scale team as needed

- Integrate with broader AI governance framework

9.2 Change Management

Stakeholder Education:

- Communicate benefits of AI First Look approach

- Address concerns about additional process overhead

- Demonstrate value through pilot project results

- Provide training and support for submission process

Cultural Adaptation:

- Encourage solution-agnostic thinking

- Promote ethical considerations in AI projects

- Build confidence in AI discovery methodology

- Celebrate early wins and learning outcomes

Conclusion

This AI First Look Framework should be regularly reviewed and updated based on organizational learning, industry best practices, and evolving AI governance standards. The framework is designed to be flexible and adaptable to different organizational contexts while maintaining its core principles of rapid assessment, ethical screening, and user-centered design.

AI Project Submission Gateway

Purpose

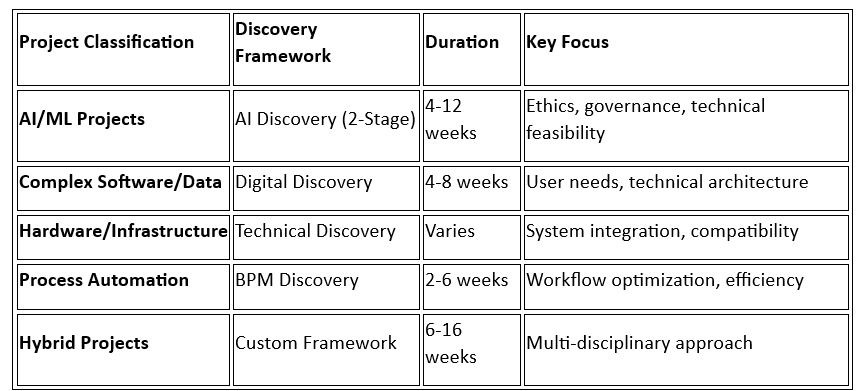

This gateway serves as the initial assessment point for all technology initiatives, helping organizations classify projects and route them to the appropriate discovery framework. Complete this assessment before beginning any discovery process. It is usually deployed as a website or as part of an automated business process. This is an outline, and I think it is too complex as written, so adapt it as needed.

This gateway assessment should be completed collaboratively with key stakeholders and reviewed by the Discovery Lead before proceeding to any discovery framework.

Section 1: Project Classification Assessment

1.1 Project Type Identification

Select the PRIMARY category that best describes your initiative:

- [ ] AI/Machine Learning Project – Involves predictive models, pattern recognition, natural language processing, computer vision, or automated decision-making

- [ ] Data Analytics/BI Project – Focuses on reporting, dashboards, data warehousing, or descriptive analytics without predictive modeling

- [ ] Software Development – Traditional application development, system integration, or platform enhancement

- [ ] Hardware/Infrastructure – Physical equipment, networking, servers, or IT infrastructure changes

- [ ] Process Automation (Non-AI) – Workflow automation, RPA, BPM, or rule-based automation without learning capabilities

- [ ] Digital Service/Platform – Customer-facing digital services, websites, or user experience improvements

- [ ] Hybrid/Multiple Categories – Combines elements from multiple categories above

1.2 AI Classification Deep Dive

If you selected “AI/Machine Learning Project” or “Hybrid”, complete this section:

AI Type Classification (Select all that apply):

- [ ] Supervised Learning – Training models with labeled data for prediction/classification

- [ ] Unsupervised Learning – Pattern discovery in unlabeled data (clustering, anomaly detection)

- [ ] Reinforcement Learning – Learning through interaction and feedback

- [ ] Natural Language Processing – Text analysis, chatbots, language translation

- [ ] Computer Vision – Image/video analysis, object recognition, facial recognition

- [ ] Generative AI – Content creation, large language models, synthetic data generation

- [ ] Decision Support Systems – AI-assisted human decision making

- [ ] Autonomous Systems – Fully automated decision-making with minimal human oversight

- [ ] Expert Systems – Rule-based AI mimicking human expertise

- [ ] Hybrid AI – Combines multiple AI approaches

AI Complexity Level:

- [ ] Proof of Concept – Experimental, limited scope, research-focused

- [ ] Pilot/MVP – Small-scale implementation with defined user group

- [ ] Production System – Full-scale deployment affecting core business operations

- [ ] Enterprise Platform – Large-scale AI infrastructure serving multiple use cases

Section 2: Strategic Alignment Assessment

2.1 Business Context

Problem Statement (150 words max):

[Describe the specific business problem or opportunity this project addresses]

Strategic Priority Level:

- [ ] Critical – Essential for business survival or regulatory compliance

- [ ] High – Significant competitive advantage or efficiency gain

- [ ] Medium – Moderate business improvement

- [ ] Low – Nice-to-have or experimental

Primary Stakeholders:

- [ ] Executive Leadership

- [ ] End Users/Customers

- [ ] Operations Teams

- [ ] IT/Technology Teams

- [ ] Regulatory/Compliance

- [ ] External Partners/Vendors

2.2 Organizational Readiness

Data Readiness:

- [ ] High-quality, accessible data available

- [ ] Some data available but requires cleanup/integration

- [ ] Limited data, significant collection/preparation needed

- [ ] No relevant data currently available

Technical Infrastructure:

- [ ] Robust cloud/on-premise infrastructure ready

- [ ] Basic infrastructure, some enhancement needed

- [ ] Limited infrastructure, significant investment required

- [ ] No relevant infrastructure in place

Team Capabilities:

- [ ] AI/ML expertise available in-house

- [ ] Some technical skills, training/hiring needed

- [ ] Limited technical capabilities

- [ ] No relevant expertise available

Section 3: Governance & Risk Assessment

3.1 Regulatory & Compliance Requirements

Select all applicable regulatory frameworks:

- [ ] GDPR (Data Protection)

- [ ] HIPAA (Healthcare)

- [ ] SOX (Financial Reporting)

- [ ] PCI DSS (Payment Card Industry)

- [ ] Industry-specific regulations (specify): _______________

- [ ] Government/Public sector requirements

- [ ] No specific regulatory requirements identified

3.2 Ethical Considerations Screening

Does your project involve any of the following?

- [ ] Personal/sensitive data processing

- [ ] Automated decision-making affecting individuals

- [ ] Potential for algorithmic bias or discrimination

- [ ] Facial recognition or biometric data

- [ ] Vulnerable populations (children, elderly, disabled)

- [ ] High-stakes decisions (hiring, lending, healthcare, criminal justice)

- [ ] Content generation or manipulation

- [ ] Surveillance or monitoring capabilities

3.3 Risk Factors

Identify potential risk areas:

- [ ] Data privacy/security concerns

- [ ] Reputational risk from AI decisions

- [ ] Regulatory compliance challenges

- [ ] Technical complexity/failure risk

- [ ] User acceptance/adoption risk

- [ ] Vendor dependency risk

- [ ] Skills/capability gaps

- [ ] Budget/timeline constraints

Section 4: Resource Requirements

4.1 Timeline Expectations

- [ ] Under 3 months

- [ ] 3-6 months

- [ ] 6-12 months

- [ ] Over 12 months

- [ ] Timeline flexible/unknown

4.2 Budget Range

- [ ] Under £50k

- [ ] £50k – £250k

- [ ] £250k – £1M

- [ ] Over £1M

- [ ] Budget not yet defined

4.3 Team Commitment

Estimated team size needed:

- [ ] 1-3 people

- [ ] 4-8 people

- [ ] 9-15 people

- [ ] Over 15 people

Section 5: Gateway Decision Matrix

Based on your responses above, this gateway will route your project to the appropriate discovery framework:

Routing Criteria:

Minimum Requirements for AI Discovery:

✅ Required for AI Discovery Track:

- Project involves AI/ML capabilities (Section 1.2)

- Strategic priority: Medium or above

- Ethical considerations identified OR high-risk factors present

- Committed team and stakeholder engagement

- Clear business problem statement

❌ Exclusion Criteria:

- Purely technical infrastructure projects

- Simple automation without learning capabilities

- Projects with no data requirements

- Proof-of-concept with no implementation intent
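
If the gateway is deployed as a website or automated process, the criteria above could be checked in code. The following is a minimal sketch under stated assumptions: the `Submission` structure and `unmet_requirements` function are hypothetical, any selected risk factor from Section 3.3 is counted as a "high-risk factor", and a "clear" problem statement is approximated as non-empty and within the 150-word limit from Section 2.1.

```python
from dataclasses import dataclass, field
from typing import List

# Priority levels from Section 2.1, lowest to highest.
PRIORITY_ORDER = ["Low", "Medium", "High", "Critical"]


@dataclass
class Submission:
    """Hypothetical record of the gateway answers relevant to routing."""
    involves_ai: bool
    priority: str            # must be one of PRIORITY_ORDER
    problem_statement: str
    team_committed: bool
    ethical_flags: List[str] = field(default_factory=list)  # Section 3.2
    risk_factors: List[str] = field(default_factory=list)   # Section 3.3
    exclusions: List[str] = field(default_factory=list)     # matched exclusion criteria


def unmet_requirements(s: Submission) -> List[str]:
    """Return reasons the submission misses the AI Discovery track.

    An empty list means every minimum requirement is met.
    """
    reasons = [f"excluded: {e}" for e in s.exclusions]
    if not s.involves_ai:
        reasons.append("no AI/ML capabilities")
    if PRIORITY_ORDER.index(s.priority) < PRIORITY_ORDER.index("Medium"):
        reasons.append("strategic priority below Medium")
    if not (s.ethical_flags or s.risk_factors):
        reasons.append("no ethical considerations or risk factors identified")
    if not s.team_committed:
        reasons.append("team and stakeholders not committed")
    words = len(s.problem_statement.split())
    if words == 0 or words > 150:
        reasons.append("problem statement missing or over 150 words")
    return reasons


s = Submission(involves_ai=True, priority="High",
               problem_statement="Reduce manual triage of support tickets.",
               team_committed=True,
               ethical_flags=["Personal/sensitive data processing"])
print(unmet_requirements(s) or "Route to AI Discovery track")
```

Returning the list of reasons, rather than a plain yes or no, gives submitters concrete feedback on what to address before resubmitting.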

Section 6: Submission Summary

Project Title:

[Enter descriptive project title]

Submission Checklist:

- [ ] All relevant sections completed

- [ ] Stakeholders identified and engaged

- [ ] Initial budget/timeline estimates provided

- [ ] Regulatory requirements understood

- [ ] Risk factors acknowledged

Recommended Next Steps:

Based on your responses, your project is classified as:

- [ ] AI Discovery Required → Proceed to Stage 1: First Look (3-5 days)

- [ ] Digital Discovery → Standard digital discovery process

- [ ] Technical Discovery → Infrastructure/systems focus

- [ ] Process Discovery → BPM/automation focus

- [ ] Hybrid Discovery → Custom multi-track approach

- [ ] Not Ready → Address prerequisite gaps before resubmission

Gateway Approval:

Discovery Lead Signature: _______________ Date: _______________

Business Sponsor Approval: _______________ Date: _______________

Supporting Documentation Required

For projects proceeding to AI Discovery, prepare the following:

- Detailed problem statement and success criteria

- Data inventory and access permissions

- Stakeholder contact list and RASCI matrix

- Preliminary risk assessment

- Budget approval documentation

- Compliance/legal review (if applicable)