Preamble

The evolution of computing has been marked by fundamental shifts in how operating systems manage resources and interact with users. From batch processing systems to time-sharing, from personal computing to mobile platforms, each paradigm shift has redefined the relationship between hardware capabilities and software demands. Today, we stand at the precipice of another such transformation: the emergence of AI-native operating systems capable of autonomous self-configuration.

This paper outlines a framework for developing an operating system that treats artificial intelligence not as an add-on feature but as a foundational component of its architecture. Unlike traditional operating systems that rely on static configuration and user intervention, the proposed system employs continuous environmental sensing, predictive workload analysis, and dynamic resource orchestration to optimize performance, energy efficiency, and user experience in real time.

The significance of this work extends beyond technological advancement. As computing devices proliferate across diverse form factors, from edge IoT sensors to high-performance workstations, the need for a unified, adaptive platform becomes increasingly critical. Current operating systems, burdened by decades of legacy architecture, struggle to manage the heterogeneous hardware landscape efficiently while meeting the evolving demands of modern workloads.

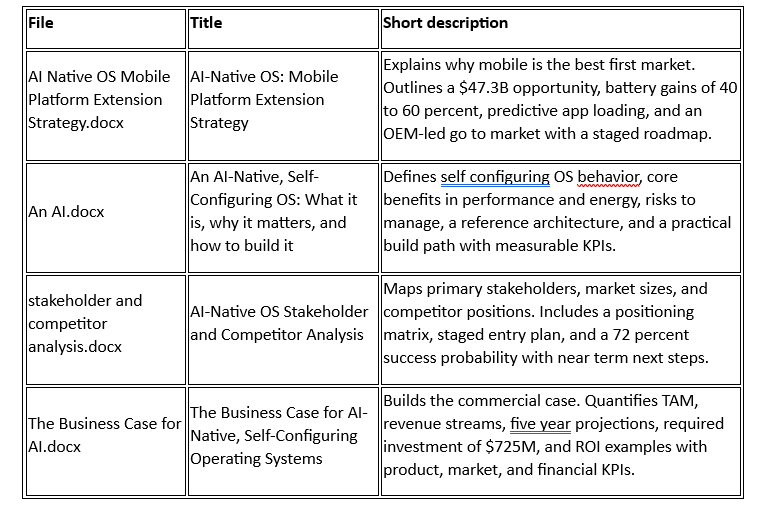

Applying the concept of this post to mobile devices took an unexpected turn: the mobile variant appears to have more traction and market viability. See AI-Native OS: Mobile Platform Extension Strategy, along with the outline SRS I created, AI Native Mobile Operating System SRS.

I. Novel Conceptual Framework

1.1 Autonomous System Orchestration

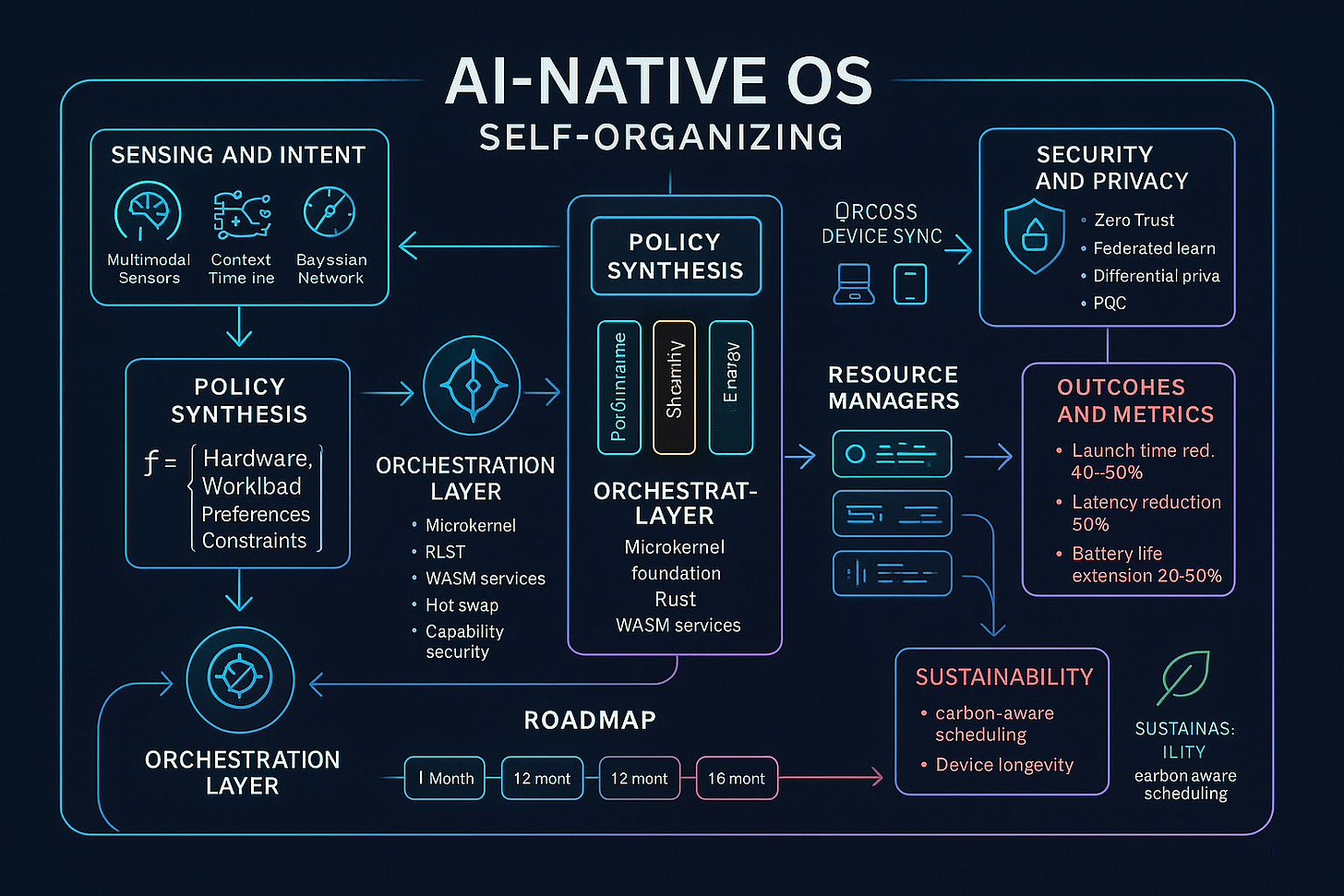

The core innovation lies in treating the operating system as a living entity capable of self-awareness and adaptation. Traditional OS design follows a reactive model: users launch applications, and the system responds by allocating resources according to predefined policies. Our proposed AI-native OS operates on a predictive model, continuously analyzing environmental signals to anticipate user needs and optimize system state proactively.

Key Innovation: Intent Inference Engine

- Multi-modal signal processing (application usage patterns, peripheral connections, temporal context, biometric indicators)

- Bayesian inference networks for probabilistic workload prediction

- Reinforcement learning for policy optimization based on user feedback
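To make the Bayesian bullet concrete, here is a deliberately small sketch: a naive Bayes classifier that updates a prior over workload classes using observed binary signals. The class names, signals, and likelihood values are hypothetical placeholders, not parameters from this proposal, and naive Bayes assumes signals are independent given the class, which a full Bayesian network would not.

```rust
/// Hypothetical workload classes the intent engine might predict.
#[derive(Debug, Clone, Copy, PartialEq)]
pub enum Workload { Gaming, Coding, Browsing }

pub const CLASSES: [Workload; 3] = [Workload::Gaming, Workload::Coding, Workload::Browsing];

/// Hypothetical binary signals observed from the environment.
#[derive(Debug, Clone, Copy)]
pub enum Signal { GamepadConnected, IdeFocused, HighGpuLoad }

/// P(signal present | class). Illustrative numbers only.
fn likelihood(class: Workload, signal: Signal) -> f64 {
    match (class, signal) {
        (Workload::Gaming, Signal::GamepadConnected) => 0.7,
        (Workload::Gaming, Signal::IdeFocused) => 0.05,
        (Workload::Gaming, Signal::HighGpuLoad) => 0.9,
        (Workload::Coding, Signal::GamepadConnected) => 0.02,
        (Workload::Coding, Signal::IdeFocused) => 0.8,
        (Workload::Coding, Signal::HighGpuLoad) => 0.2,
        (Workload::Browsing, Signal::GamepadConnected) => 0.03,
        (Workload::Browsing, Signal::IdeFocused) => 0.05,
        (Workload::Browsing, Signal::HighGpuLoad) => 0.1,
    }
}

/// Posterior ∝ prior × Π P(signal | class), normalized to sum to 1.
/// Only signals that are present are scored; absent signals are ignored for brevity.
pub fn posterior(prior: [f64; 3], observed: &[Signal]) -> [f64; 3] {
    let mut post = prior;
    for (i, &class) in CLASSES.iter().enumerate() {
        for &s in observed {
            post[i] *= likelihood(class, s);
        }
    }
    let total: f64 = post.iter().sum();
    post.map(|p| p / total)
}

/// Most probable workload under the posterior.
pub fn predict(prior: [f64; 3], observed: &[Signal]) -> Workload {
    let post = posterior(prior, observed);
    let mut best = 0;
    for i in 1..post.len() {
        if post[i] > post[best] {
            best = i;
        }
    }
    CLASSES[best]
}
```

In use, the prior would come from temporal context (time of day, recent sessions) and be refined as each new signal arrives.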

1.2 Dynamic Policy Synthesis

Rather than relying on static configuration profiles, the system generates custom policies on-demand through compositional synthesis of atomic optimization strategies.

Policy Composition Framework:

Policy = f(Hardware_Profile, Workload_Characteristics, User_Preferences, Environmental_Constraints)

This approach enables fine-grained optimization that goes beyond traditional “performance vs. battery” trade-offs to consider factors such as thermal constraints, acoustic requirements, security posture, and sustainability goals.
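One way to read `Policy = f(...)` is as a fold over atomic strategies, where each strategy inspects the four inputs and adjusts a shared policy. The sketch below assumes hypothetical field names, thresholds, and strategy functions; it illustrates the compositional idea rather than specifying it.

```rust
/// Inputs to f(Hardware_Profile, Workload_Characteristics, User_Preferences, Environmental_Constraints).
pub struct HardwareProfile { pub max_cpu_mhz: u32, pub has_fan: bool }
pub struct WorkloadCharacteristics { pub cpu_bound: bool }
pub struct UserPreferences { pub quiet_mode: bool }
pub struct EnvironmentalConstraints { pub on_battery: bool, pub skin_temp_c: f32 }

pub struct Context<'a> {
    pub hw: &'a HardwareProfile,
    pub work: &'a WorkloadCharacteristics,
    pub prefs: &'a UserPreferences,
    pub env: &'a EnvironmentalConstraints,
}

/// The synthesized policy; fields are illustrative.
#[derive(Debug, Clone, PartialEq)]
pub struct Policy { pub cpu_cap_mhz: u32, pub max_fan_pct: u8 }

/// An atomic optimization strategy: takes the context and the policy so far, returns an adjusted policy.
pub type Strategy = fn(&Context<'_>, Policy) -> Policy;

fn performance(ctx: &Context<'_>, mut p: Policy) -> Policy {
    if ctx.work.cpu_bound { p.cpu_cap_mhz = ctx.hw.max_cpu_mhz; }
    p
}

fn battery(ctx: &Context<'_>, mut p: Policy) -> Policy {
    if ctx.env.on_battery { p.cpu_cap_mhz = p.cpu_cap_mhz.min(ctx.hw.max_cpu_mhz * 7 / 10); }
    p
}

fn thermal(ctx: &Context<'_>, mut p: Policy) -> Policy {
    if ctx.env.skin_temp_c > 42.0 { p.cpu_cap_mhz = p.cpu_cap_mhz.min(ctx.hw.max_cpu_mhz / 2); }
    p
}

fn acoustic(ctx: &Context<'_>, mut p: Policy) -> Policy {
    if ctx.hw.has_fan && ctx.prefs.quiet_mode { p.max_fan_pct = p.max_fan_pct.min(40); }
    p
}

/// Apply strategies in order starting from a moderate baseline. Order matters:
/// `performance` can raise the CPU cap, while the later strategies only tighten limits via `min`.
pub fn synthesize(ctx: &Context<'_>, strategies: &[Strategy]) -> Policy {
    let base = Policy { cpu_cap_mhz: ctx.hw.max_cpu_mhz * 6 / 10, max_fan_pct: 100 };
    strategies.iter().fold(base, |p, s| s(ctx, p))
}
```

Because strategies are plain functions, new concerns (security posture, sustainability) can be added as further entries in the list without touching existing ones.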

1.3 Cross-Platform Continuum Architecture

The system introduces a novel abstraction layer that enables seamless operation across disparate hardware architectures (x86, ARM, RISC-V) while maintaining consistent user experience and application compatibility.
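At its simplest, such an abstraction layer is a common interface with per-architecture backends selected at build or boot time. The sketch assumes a hypothetical `PlatformBackend` trait and backend types; `std::env::consts::ARCH` is the real Rust standard-library constant naming the compile target's architecture (for example `"x86_64"`, `"aarch64"`, or `"riscv64"`).

```rust
use std::env::consts::ARCH;

/// Hypothetical interface that upper OS layers program against.
pub trait PlatformBackend {
    fn name(&self) -> &'static str;
    /// Whether the ISA is a load/store RISC design.
    fn is_risc(&self) -> bool;
}

struct X86Backend;
struct ArmBackend;
struct RiscVBackend;

impl PlatformBackend for X86Backend {
    fn name(&self) -> &'static str { "x86_64" }
    fn is_risc(&self) -> bool { false }
}
impl PlatformBackend for ArmBackend {
    fn name(&self) -> &'static str { "aarch64" }
    fn is_risc(&self) -> bool { true }
}
impl PlatformBackend for RiscVBackend {
    fn name(&self) -> &'static str { "riscv64" }
    fn is_risc(&self) -> bool { true }
}

/// Select a backend by architecture name. Unknown targets return None
/// so callers can fall back to a generic code path.
pub fn backend_for(arch: &str) -> Option<Box<dyn PlatformBackend>> {
    match arch {
        "x86_64" => Some(Box::new(X86Backend)),
        "aarch64" => Some(Box::new(ArmBackend)),
        "riscv64" => Some(Box::new(RiscVBackend)),
        _ => None,
    }
}

/// Backend for the architecture this binary was compiled for.
pub fn current_backend() -> Option<Box<dyn PlatformBackend>> {
    backend_for(ARCH)
}
```

Code above this layer sees only `dyn PlatformBackend`, which is what keeps application behavior consistent across ISAs.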

1.4 Outline Research Analysis: AI OS Artifacts

II. Technical Architecture and Implementation

2.1 Microkernel-Based Foundation

Core Design Principles:

- Isolation-First Architecture: Critical system services run in separate address spaces to prevent cascading failures during live reconfiguration

- Hot-Swappable Services: Modular design enables runtime replacement of system components without requiring reboots

- Capability-Based Security: Fine-grained permission model that adapts security boundaries based on current risk assessment
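The hot-swap principle can be sketched under one assumption: callers always reach a service through a registry lookup, so a replacement takes effect on the next call without restarting anything. In a real microkernel the services would run in separate address spaces behind IPC; the in-process `RwLock` here only illustrates the swap semantics. `Service` and `ServiceRegistry` are hypothetical names.

```rust
use std::collections::HashMap;
use std::sync::{Arc, RwLock};

/// Hypothetical service interface.
pub trait Service: Send + Sync {
    fn version(&self) -> u32;
    fn handle(&self, request: &str) -> String;
}

/// Maps service names to the currently active implementation.
#[derive(Default)]
pub struct ServiceRegistry {
    services: RwLock<HashMap<String, Arc<dyn Service>>>,
}

impl ServiceRegistry {
    /// Install or replace a service. Returns the previous implementation, if any,
    /// so it can be drained or kept for rollback.
    pub fn swap(&self, name: &str, svc: Arc<dyn Service>) -> Option<Arc<dyn Service>> {
        self.services.write().unwrap().insert(name.to_string(), svc)
    }

    /// Look up the active implementation. A caller still holding an old Arc
    /// finishes its in-flight work on the old version; new lookups see the new one.
    pub fn get(&self, name: &str) -> Option<Arc<dyn Service>> {
        self.services.read().unwrap().get(name).cloned()
    }
}
```

Returning the old implementation from `swap` is a deliberate choice: it gives the reconfiguration logic an easy rollback path if the new version misbehaves.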

Implementation Strategy:

- Rust-based kernel for memory safety and performance

- WASM-based service isolation for cross-platform compatibility

- Hardware abstraction layer (HAL) with standardized interfaces for driver development

2.2 AI-Driven Resource Management

Intent Classification System:

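```rust
// Minimal stand-in types so the sketch compiles; real versions would carry far richer state.
#[derive(Debug, Clone, Copy, PartialEq)]
pub enum WorkloadIntent { Idle, Interactive, Compute }
pub struct SystemContext { pub cpu_load: f32, pub foreground_active: bool }
pub struct Signals { cpu_load: f32, interactive: bool }
pub struct Policy { pub intent: WorkloadIntent, pub cpu_boost: bool }
pub struct MultiModalSensorFusion;
pub struct WorkloadPredictor;
pub struct PolicyComposer;
impl MultiModalSensorFusion {
    fn aggregate_signals(&self, ctx: &SystemContext) -> Signals {
        Signals { cpu_load: ctx.cpu_load, interactive: ctx.foreground_active }
    }
}
impl WorkloadPredictor {
    // Placeholder heuristic standing in for the learned predictor.
    fn predict_workload(&self, s: &Signals) -> WorkloadIntent {
        if s.cpu_load > 0.8 { WorkloadIntent::Compute }
        else if s.interactive { WorkloadIntent::Interactive }
        else { WorkloadIntent::Idle }
    }
}
impl PolicyComposer {
    fn generate_policy(&self, intent: WorkloadIntent) -> Policy {
        Policy { intent, cpu_boost: intent != WorkloadIntent::Idle }
    }
}

pub struct IntentClassifier {
    sensor_fusion: MultiModalSensorFusion,
    prediction_engine: WorkloadPredictor,
    policy_synthesizer: PolicyComposer,
}
impl IntentClassifier {
    // Returns the predicted intent only; returning the policy here would not match the signature.
    pub fn classify_workload(&self, context: &SystemContext) -> WorkloadIntent {
        let signals = self.sensor_fusion.aggregate_signals(context);
        self.prediction_engine.predict_workload(&signals)
    }
    // Hypothetical helper: policy synthesis as a separate step that consumes the intent.
    pub fn plan(&self, context: &SystemContext) -> Policy {
        self.policy_synthesizer.generate_policy(self.classify_workload(context))
    }
}
```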

Resource Orchestration Engine:

- GPU/NPU scheduler with workload-aware allocation

- Memory management with predictive prefetching

- I/O prioritization based on application criticality

- Network QoS adaptation for bandwidth optimization
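The I/O prioritization bullet can be sketched as a priority queue keyed by application criticality, with first-in, first-out ordering among requests of equal criticality. The criticality tiers and the `IoRequest`/`IoScheduler` types are hypothetical; the queue itself is the standard library's `BinaryHeap`, which is a max-heap.

```rust
use std::cmp::Ordering;
use std::collections::BinaryHeap;

/// Hypothetical criticality tiers; derived order is Background < Normal < Foreground.
#[derive(Debug, Clone, Copy, PartialEq, Eq, PartialOrd, Ord)]
pub enum Criticality { Background, Normal, Foreground }

#[derive(Debug, PartialEq, Eq)]
pub struct IoRequest { pub id: u64, pub criticality: Criticality, seq: u64 }

impl Ord for IoRequest {
    fn cmp(&self, other: &Self) -> Ordering {
        // Higher criticality first; among equals, the older request (lower seq) first.
        self.criticality
            .cmp(&other.criticality)
            .then_with(|| other.seq.cmp(&self.seq))
    }
}

impl PartialOrd for IoRequest {
    fn partial_cmp(&self, other: &Self) -> Option<Ordering> { Some(self.cmp(other)) }
}

#[derive(Default)]
pub struct IoScheduler { queue: BinaryHeap<IoRequest>, next_seq: u64 }

impl IoScheduler {
    pub fn submit(&mut self, id: u64, criticality: Criticality) {
        self.queue.push(IoRequest { id, criticality, seq: self.next_seq });
        self.next_seq += 1;
    }

    /// Next request to dispatch, or None if the queue is empty.
    pub fn dispatch(&mut self) -> Option<IoRequest> {
        self.queue.pop()
    }
}
```

Strict priority like this can starve background I/O under sustained foreground load; a production scheduler would add aging or per-tier budgets.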

2.3 Cross-Device Synchronization Protocol

Profile Synchronization Framework:

- Encrypted, distributed configuration management

- Conflict resolution algorithms for multi-device scenarios

- Bandwidth-aware synchronization with delta compression

- Privacy-preserving analytics for cross-device optimization
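For conflict resolution, one common baseline is a last-writer-wins (LWW) register with a deterministic tiebreak, so every device converges on the same value regardless of the order in which it merges updates. This is a swapped-in illustration, not a protocol the framework specifies. It assumes loosely synchronized clocks (a hybrid logical clock would be more robust), and LWW silently discards the losing write, which may be unacceptable for some settings.

```rust
/// A last-writer-wins register for a single configuration key.
#[derive(Debug, Clone, PartialEq)]
pub struct LwwRegister<T> {
    pub value: T,
    /// Write timestamp (e.g. milliseconds); assumed roughly synchronized across devices.
    pub timestamp: u64,
    /// Device identifier used to break timestamp ties deterministically.
    pub device_id: u32,
}

impl<T: Clone> LwwRegister<T> {
    /// The write with the larger (timestamp, device_id) pair wins on every device.
    /// Merge is commutative, associative, and idempotent, provided a device never
    /// issues two different writes with the same timestamp.
    pub fn merge(&self, other: &Self) -> Self {
        if (other.timestamp, other.device_id) > (self.timestamp, self.device_id) {
            other.clone()
        } else {
            self.clone()
        }
    }
}
```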

III. Performance and Efficiency Optimization

3.1 Predictive Performance Scaling

The system employs machine learning models trained on user behavior patterns to predict resource demands and pre-configure system state accordingly.

Technical Approach:

- Time-series analysis of historical usage patterns

- Markov chains for state transition prediction

- Deep reinforcement learning for long-term optimization strategies
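The Markov-chain bullet can be illustrated with a first-order model over application launches: count observed transitions, then treat the most frequent successor of the current app as a prefetch candidate. The `LaunchPredictor` type and app names are hypothetical, and a production model would decay stale history and combine this with the time-series and reinforcement-learning components listed above.

```rust
use std::collections::HashMap;

/// First-order Markov model: counts[from][to] = number of observed transitions.
#[derive(Default)]
pub struct LaunchPredictor {
    counts: HashMap<String, HashMap<String, u32>>,
    last: Option<String>,
}

impl LaunchPredictor {
    /// Record that `app` was launched, counting the transition from the previous app.
    pub fn observe(&mut self, app: &str) {
        if let Some(prev) = self.last.take() {
            *self.counts.entry(prev).or_default().entry(app.to_string()).or_insert(0) += 1;
        }
        self.last = Some(app.to_string());
    }

    /// Maximum-likelihood estimate of P(next = to | current = from); 0.0 without history.
    pub fn probability(&self, from: &str, to: &str) -> f64 {
        match self.counts.get(from) {
            Some(next) => {
                let total: u32 = next.values().sum();
                f64::from(*next.get(to).unwrap_or(&0)) / f64::from(total)
            }
            None => 0.0,
        }
    }

    /// Most likely next app after `from`; ties go to the alphabetically first name.
    pub fn predict_next(&self, from: &str) -> Option<&str> {
        self.counts
            .get(from)?
            .iter()
            .max_by(|a, b| a.1.cmp(b.1).then_with(|| b.0.cmp(a.0)))
            .map(|(name, _)| name.as_str())
    }
}
```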

Projected Benefits (design targets, not measured results):

- Application launch time reduction: 40-60%

- Context switching overhead reduction: 25-35%

- Battery life extension: 20-30% through intelligent power management

3.2 Adaptive Energy Management

Multi-Tier Power Optimization:

- Hardware-Level: Dynamic voltage and frequency scaling based on workload characteristics

- Service-Level: Intelligent hibernation of unused system services

- Application-Level: Background process prioritization based on user intent

- Network-Level: Adaptive bandwidth allocation and connection management
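For the hardware-level tier, a utilization-driven DVFS governor is the usual starting point. The sketch loosely follows the Linux `schedutil` governor's rule, next_freq = 1.25 × max_freq × util / max, and then rounds up to the nearest supported operating point. The frequency table is hypothetical; the workload-aware hinting this proposal describes would adjust the utilization input or the headroom factor rather than replace this step.

```rust
/// Supported CPU operating points in MHz, ascending. Hypothetical values.
pub const OPP_MHZ: [u32; 5] = [600, 1200, 1800, 2400, 3000];

/// Headroom factor as in schedutil: at 80% utilization the request reaches max frequency.
const HEADROOM: f64 = 1.25;

/// Choose the lowest operating point at or above the target frequency.
/// `util` is utilization relative to maximum capacity, clamped to [0.0, 1.0].
pub fn next_frequency(util: f64) -> u32 {
    let max = *OPP_MHZ.last().unwrap();
    let target = HEADROOM * f64::from(max) * util.clamp(0.0, 1.0);
    OPP_MHZ
        .iter()
        .copied()
        .find(|&f| f64::from(f) >= target)
        .unwrap_or(max)
}
```

Rounding up rather than to the nearest point trades a little energy for avoiding under-provisioning when demand sits between operating points.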

3.3 Thermal and Acoustic Optimization

Intelligent Thermal Management:

- Predictive thermal modeling to prevent performance throttling

- Workload scheduling that considers thermal constraints

- Fan curve optimization based on acoustic preferences and ambient conditions
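Predictive thermal modeling can start from a first-order lumped RC model, in which temperature rises with dissipated power and decays toward ambient: dT/dt = (P·R − (T − T_amb)) / (R·C). Stepping it forward lets the scheduler see whether a planned workload would hit the throttle limit before it does. The thermal resistance, capacitance, and limit values are hypothetical; real devices would fit them per chassis.

```rust
/// Lumped thermal model parameters.
pub struct ThermalModel {
    /// Thermal resistance to ambient, in °C per watt.
    pub r_c_per_w: f64,
    /// Thermal capacitance, in joules per °C.
    pub c_j_per_c: f64,
    pub ambient_c: f64,
}

impl ThermalModel {
    /// Advance the temperature by `dt_s` seconds at constant power (forward Euler step).
    pub fn step(&self, temp_c: f64, power_w: f64, dt_s: f64) -> f64 {
        let d_temp = (power_w * self.r_c_per_w - (temp_c - self.ambient_c))
            / (self.r_c_per_w * self.c_j_per_c);
        temp_c + d_temp * dt_s
    }

    /// Seconds until `limit_c` is reached while sustaining `power_w`,
    /// or None if the limit is not reached within `horizon_s`.
    pub fn time_to_limit(&self, mut temp_c: f64, power_w: f64, limit_c: f64, horizon_s: f64) -> Option<f64> {
        let dt = 0.1; // step must stay small relative to the R·C time constant
        let mut t = 0.0;
        while t < horizon_s {
            if temp_c >= limit_c {
                return Some(t);
            }
            temp_c = self.step(temp_c, power_w, dt);
            t += dt;
        }
        None
    }
}
```

A scheduler could compare `time_to_limit` against a job's expected duration and, if the job would throttle, spread it out or move it to a cooler core before the limit is reached.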

IV. Security and Privacy Framework

4.1 Zero-Trust Architecture

Adaptive Security Posture:

- Risk-based authentication that scales with task sensitivity

- Continuous behavioral analysis for anomaly detection

- Sandboxing with dynamic privilege escalation based on trust scores
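Risk-based authentication and trust-scored privilege decisions can share one mechanism: combine task sensitivity with a behavioral anomaly score, then map the result to the strength of authentication required. The tiers, weights, and thresholds below are hypothetical; the anomaly score would come from the continuous behavioral analysis described above.

```rust
/// Sensitivity of the requested operation (hypothetical tiers).
#[derive(Debug, Clone, Copy)]
pub enum Sensitivity { Low, Medium, High }

/// Required authentication step, ordered weakest to strongest.
#[derive(Debug, Clone, Copy, PartialEq, Eq, PartialOrd, Ord)]
pub enum AuthRequirement { NoPrompt, DeviceUnlock, Biometric, BiometricPlusPin, Deny }

/// Combine sensitivity with an anomaly score in [0, 1], where 0 means typical behavior.
pub fn risk_score(sensitivity: Sensitivity, anomaly: f64) -> f64 {
    let base = match sensitivity {
        Sensitivity::Low => 0.1,
        Sensitivity::Medium => 0.4,
        Sensitivity::High => 0.6,
    };
    (base + 0.5 * anomaly.clamp(0.0, 1.0)).min(1.0)
}

/// Step authentication up as risk grows; the highest-risk requests are refused outright.
pub fn required_auth(sensitivity: Sensitivity, anomaly: f64) -> AuthRequirement {
    match risk_score(sensitivity, anomaly) {
        r if r < 0.2 => AuthRequirement::NoPrompt,
        r if r < 0.45 => AuthRequirement::DeviceUnlock,
        r if r < 0.7 => AuthRequirement::Biometric,
        r if r < 0.95 => AuthRequirement::BiometricPlusPin,
        _ => AuthRequirement::Deny,
    }
}
```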

4.2 Privacy-Preserving AI

On-Device Processing Priority:

- Local model inference for intent classification

- Federated learning for system optimization without data exfiltration

- Differential privacy for telemetry collection
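Differential privacy for telemetry is commonly achieved with the Laplace mechanism: add noise drawn from Laplace(0, Δ/ε) to each reported value, where Δ is the query's sensitivity and ε the privacy budget. The sketch samples by inverse CDF. To stay dependency-free it uses a small xorshift64 generator, which is not cryptographically secure; a real deployment would use an OS-backed CSPRNG and account for the known floating-point weaknesses of naive Laplace sampling.

```rust
/// Minimal xorshift64 PRNG (Marsaglia). Illustration only: NOT cryptographically secure.
pub struct XorShift64(u64);

impl XorShift64 {
    pub fn new(seed: u64) -> Self {
        XorShift64(seed.max(1)) // state must be non-zero
    }

    fn next_u64(&mut self) -> u64 {
        let mut x = self.0;
        x ^= x << 13;
        x ^= x >> 7;
        x ^= x << 17;
        self.0 = x;
        x
    }

    /// Uniform sample strictly inside (0, 1), built from 52 random bits.
    fn next_open01(&mut self) -> f64 {
        ((self.next_u64() >> 12) as f64 + 0.5) / (1u64 << 52) as f64
    }
}

/// Draw from Laplace(0, scale) via the inverse CDF.
pub fn laplace(rng: &mut XorShift64, scale: f64) -> f64 {
    let u = rng.next_open01() - 0.5; // u in (-0.5, 0.5), so the log argument stays positive
    -scale * u.signum() * (1.0 - 2.0 * u.abs()).ln()
}

/// Report `value` with epsilon-differential privacy for a query with the given sensitivity.
pub fn privatize(rng: &mut XorShift64, value: f64, sensitivity: f64, epsilon: f64) -> f64 {
    value + laplace(rng, sensitivity / epsilon)
}
```

Individual reports are noisy, but averages over many devices remain accurate, which is what fleet-level optimization needs.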

4.3 Post-Quantum Cryptography

Future-Proof Security Design:

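- Hybrid classical/post-quantum cryptographic protocols (for example, pairing a classical key exchange such as X25519 with a post-quantum KEM such as ML-KEM)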

- Quantum-resistant key exchange mechanisms

- Hardware security module integration for secure boot and attestation

V. Development and Deployment Strategy

5.1 Phased Implementation Roadmap

Phase 1: Foundation (Months 1-12)

- Microkernel development and HAL implementation

- Basic AI inference engine

- Reference hardware platform support

Phase 2: Intelligence Layer (Months 13-24)

- Intent classification system

- Dynamic policy synthesis

- Performance optimization engine

Phase 3: Ecosystem Development (Months 25-36)

- Developer tooling and SDK

- Application compatibility layer

- Third-party driver framework

Phase 4: Production Deployment (Months 37-48)

- Hardware partner integration

- Enterprise pilot programs

- Consumer beta testing

Phase 5: Ecosystem Maturation (Months 49-60)

- Full application ecosystem

- Cross-device synchronization

- Advanced AI features

5.2 Risk Mitigation Strategies

Technical Risks:

- AI Model Reliability: Implement robust fallback mechanisms and human-in-the-loop overrides

- Hardware Compatibility: Establish comprehensive driver certification program

- Performance Regression: Continuous benchmarking and automated performance testing
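The AI-reliability mitigation can be expressed as a thin guard around the model's output: a user override always wins, a missing or low-confidence prediction falls back to a static baseline, and only a confident prediction is applied. The policy variants, confidence threshold, and type names are hypothetical.

```rust
/// A policy the system could apply (hypothetical variants).
#[derive(Debug, Clone, Copy, PartialEq)]
pub enum PowerPolicy { Balanced, Performance, PowerSaver }

/// A model suggestion with its self-reported confidence in [0, 1].
pub struct Prediction { pub policy: PowerPolicy, pub confidence: f64 }

/// Why a policy was chosen, useful for user-facing transparency and audits.
#[derive(Debug, PartialEq)]
pub enum Source { UserOverride, Model, Fallback }

pub struct GuardedPolicy {
    pub baseline: PowerPolicy,
    pub min_confidence: f64,
    pub user_override: Option<PowerPolicy>,
}

impl GuardedPolicy {
    /// Decide which policy to apply; `prediction` is None if the model failed or timed out.
    pub fn decide(&self, prediction: Option<&Prediction>) -> (PowerPolicy, Source) {
        if let Some(p) = self.user_override {
            return (p, Source::UserOverride); // human-in-the-loop always wins
        }
        match prediction {
            // A NaN confidence fails this comparison and therefore also falls back.
            Some(pred) if pred.confidence >= self.min_confidence => (pred.policy, Source::Model),
            _ => (self.baseline, Source::Fallback),
        }
    }
}
```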

Market Risks:

- Developer Adoption: Provide migration tools and comprehensive documentation

- User Acceptance: Gradual feature rollout with opt-out mechanisms

- Ecosystem Fragmentation: Open-source core with standardized extension APIs

VI. Comparative Analysis

6.1 Advantages Over Current Platforms

Versus Windows:

- Elimination of registry-based configuration complexity

- Proactive resource management vs. reactive allocation

- Built-in containerization with minimal performance overhead

Versus macOS:

- Cross-hardware platform support

- User-controllable privacy and telemetry settings

- Open ecosystem for hardware manufacturers

Versus Linux:

- Unified user experience across distributions

- Built-in AI optimization without manual configuration

- Hardware abstraction that simplifies driver development

6.2 Quantitative Performance Metrics

Target Improvements:

- System responsiveness: 50% reduction in perceived latency

- Energy efficiency: 30% improvement in battery life

- Hardware compatibility: 95% coverage of consumer devices

- Security incidents: 80% reduction through proactive threat mitigation

VII. Sustainability and Environmental Impact

7.1 Circular Computing Principles

Hardware Longevity:

- Adaptive performance scaling to extend device lifespan

- Lightweight operation modes for aging hardware

- Modular upgrade support to reduce electronic waste

7.2 Carbon Footprint Optimization

Energy-Aware Computing:

- Real-time carbon intensity monitoring

- Workload scheduling based on renewable energy availability

- Transparent energy consumption reporting for users and organizations
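In its simplest form, scheduling around renewable availability means choosing the start hour for a deferrable job that minimizes total grid carbon intensity over the job's duration while still meeting a deadline. The sketch assumes an hourly carbon-intensity forecast (gCO2/kWh) is available from some provider; the data source and the numbers in the test are hypothetical.

```rust
/// Choose the start hour in [0, deadline - duration] that minimizes the summed
/// forecast carbon intensity over `duration` consecutive hours.
/// `forecast[h]` is the predicted intensity in gCO2/kWh for hour h.
/// Returns None if the job cannot finish by the deadline within the forecast window.
/// Ties go to the earliest start hour.
pub fn best_start_hour(forecast: &[f64], duration: usize, deadline: usize) -> Option<usize> {
    let end = deadline.min(forecast.len());
    if duration > end {
        return None;
    }
    (0..=end - duration)
        .map(|start| (start, forecast[start..start + duration].iter().sum::<f64>()))
        .min_by(|a, b| a.1.total_cmp(&b.1))
        .map(|(start, _)| start)
}
```

Summing intensity per hour assumes the job draws roughly constant power; weighting each hour by expected power draw is a natural refinement.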

VIII. Future Research Directions

8.1 Neuromorphic Computing Integration

Exploration of brain-inspired computing architectures that could provide even more efficient AI processing for system optimization tasks.

8.2 Quantum-Classical Hybrid Systems

Investigation of quantum computing integration for specific optimization problems, particularly in resource allocation and cryptographic operations.

8.3 Autonomous System Evolution

Development of self-modifying code capabilities that allow the OS to evolve its own optimization strategies over time while maintaining security guarantees.

Conclusion

The development of an AI-native, self-configuring operating system represents a paradigmatic shift in how we conceptualize the relationship between users, applications, and computing resources. By embedding artificial intelligence at the foundational level of system architecture, we can create computing platforms that are not merely faster or more efficient, but fundamentally more intelligent and adaptive.

The technical challenges are substantial, requiring advances in machine learning, systems software, security architecture, and hardware abstraction. However, the potential benefits—dramatically improved performance, enhanced energy efficiency, simplified user experience, and extended hardware lifespan—justify the significant investment required.

The proposed framework addresses three critical gaps in current computing platforms: the need for adaptive performance management at the edge, the demand for built-in sustainability features, and the requirement for unified operation across diverse hardware form factors. By solving these challenges, we can create a computing platform that is not only technically superior but also more environmentally responsible and economically sustainable.

Success in this endeavor will require careful attention to privacy preservation, security hardening, and ecosystem development. The system must earn user trust through transparency, provide developers with powerful tools for innovation, and demonstrate clear advantages over existing platforms. Most importantly, it must maintain human agency and control while providing intelligent automation.

As we stand on the threshold of an era where artificial intelligence becomes ubiquitous in computing systems, the development of AI-native operating systems is not just an opportunity—it is an imperative. The platform that successfully combines intelligent automation with user empowerment, performance optimization with privacy protection, and innovation with reliability will define the next generation of computing experiences.

The roadmap presented here provides a practical path forward, but the ultimate success will depend on sustained investment, collaborative development, and unwavering commitment to user-centric design principles. The future of computing is adaptive, intelligent, and autonomous—and it begins with reimagining the operating system itself.

Keywords: artificial intelligence, operating systems, adaptive computing, resource management, microkernel architecture, machine learning, system optimization, sustainable computing, cross-platform compatibility, privacy-preserving AI