Preamble

You are hunting real edges, not hype. The edge here is portability. The next breakout in AI infrastructure might be sovereign, containerized compute that ships like cargo, lands anywhere with power, and reaches revenue fast. Think of it as a relocatable processing plant for compute. This post belongs to the Project Prometheus documentation, Ideas trigger 14: The Next AI Unicorn might be a Sovereign, Portable Processing Plant for Compute.

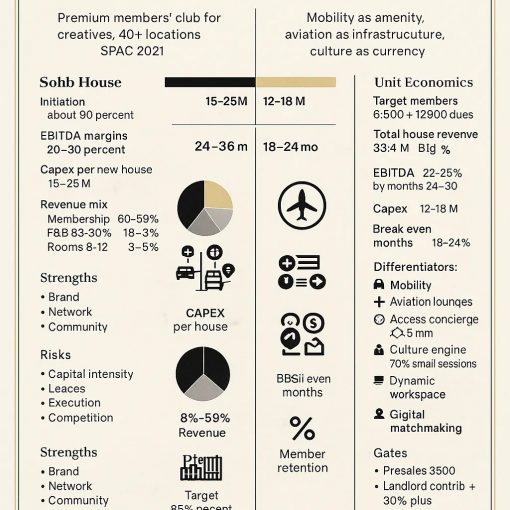

What it is

A sovereign, portable processing plant is a factory-built, ISO-containerized data pod that arrives tested, plugs into medium-voltage power, and exposes a GPU fabric as a service. Each pod forms a sealed operational unit with power, liquid cooling, security, and orchestration. Units daisy-chain into clusters and campuses as demand grows.

As usual, see the details in Modular Approach to Building Scalable AI Infrastructure for Project Prometheus. Also note Lessons from Crypto Mining vs. AI Data Centers: Striking Similarities and Key Differences. A comparison of traditional and modular approaches finds that traditional builds come out ahead. That result holds only under three conditions: affordable electricity (and supporting infrastructure) is available, connectivity is better, and the pace of change in AI technology (and AI supply chain stability) is manageable. See: Modularity vs Traditional.

Why this beats fixed hyperscale

Time to capacity drops from 36 to 48 months for a traditional build to 8 to 10 months, and deployments can run in parallel. Capex falls to roughly 3 to 4.2 million dollars per MW, a 30 to 40 percent reduction versus traditional builds. If a country or contract turns hostile, you can disconnect, ship, and reinstall within roughly 60 to 90 days.
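The capex and timeline figures imply a traditional baseline that the text does not state. The sketch below backs it out. It assumes that "a 30 to 40 percent reduction" means modular capex = traditional capex × (1 - r). That reading is an interpretation, not something the source spells out.

```python
"""Sketch: traditional-build baseline implied by the stated modular figures.

Assumption (not from the source): modular = traditional * (1 - reduction).
"""


def implied_traditional_capex(modular_per_mw: float, reduction: float) -> float:
    """Traditional capex per MW implied by a modular capex and a fractional cut."""
    if not 0.0 <= reduction < 1.0:
        raise ValueError("reduction must be in [0, 1)")
    return modular_per_mw / (1.0 - reduction)


def months_saved(traditional_months: float, modular_months: float) -> float:
    """Calendar months gained by the modular build."""
    return traditional_months - modular_months


if __name__ == "__main__":
    for capex in (3.0, 4.2):  # stated modular capex, million USD per MW
        for r in (0.30, 0.40):  # stated reduction range
            base = implied_traditional_capex(capex, r)
            print(f"modular {capex:.1f} M/MW at {r:.0%} cut -> traditional {base:.2f} M/MW")
    # Narrowest and widest gaps between the stated time-to-capacity ranges.
    print("months saved:", months_saved(36, 10), "to", months_saved(48, 8))
```

Under that reading, the figures imply traditional builds of roughly 4.3 to 7 million dollars per MW. They also imply 26 to 40 months saved per deployment.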

The winning unit: the Twin Pod at 2 MW

A two-container architecture balances efficiency and true portability.

- Compute module: 1× ISO 40-foot High Cube, target 20 tons, about 8 GPU racks, 320 to 360 GPUs, 1.5 to 2 MW load. Quick-connect power, coolant, and fiber.

- Infrastructure module: 1× ISO 40-foot High Cube, about 12 tons, with 2.5 MW of power and liquid-cooling capacity, UPS, monitoring, and robotics support.

- Assembly: 4 to 7 days on site. Ships on standard routes, no heavy-haul paperwork. Weight margins preserve portability for future GPU generations.
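A quick way to sanity-check the Twin Pod figures is to work out rack density, cooling headroom, and weight margin. The sketch below makes two assumptions that are not in the source. First, it treats the 20-ton and 12-ton targets as gross container mass. Second, it uses 30.48 t as the 40-foot High Cube maximum gross mass. That rating is commonly cited, but the real limit is on each container's CSC plate, and road limits may bind first.

```python
"""Sketch: density, headroom, and weight-margin checks for the Twin Pod.

Assumptions: module masses are gross container mass; MAX_GROSS_T is an
assumed container rating in metric tons.
"""

MAX_GROSS_T = 30.48  # assumed 40-ft High Cube max gross mass, metric tons
RACKS = 8            # "about 8 GPU racks" from the text


def kw_per(load_mw: float, count: int) -> float:
    """Average power per item (rack or GPU) in kW."""
    return load_mw * 1000.0 / count


def supply_headroom(supply_mw: float, load_mw: float) -> float:
    """Fractional power/cooling headroom of the infrastructure module."""
    return supply_mw / load_mw - 1.0


def weight_margin_t(gross_t: float, max_gross_t: float = MAX_GROSS_T) -> float:
    """Mass headroom (t) left for heavier future GPU generations."""
    return max_gross_t - gross_t


if __name__ == "__main__":
    for load_mw in (1.5, 2.0):  # stated compute load range
        print(
            f"{load_mw} MW: {kw_per(load_mw, RACKS):.1f} kW/rack, "
            f"{kw_per(load_mw, 360):.2f}-{kw_per(load_mw, 320):.2f} kW/GPU, "
            f"infra headroom {supply_headroom(2.5, load_mw):.0%}"
        )
    print(f"compute module margin: {weight_margin_t(20):.2f} t")
    print(f"infrastructure module margin: {weight_margin_t(12):.2f} t")
```

At these figures, each rack averages 187.5 to 250 kW. The per-GPU number is an all-in average that includes networking, CPUs, and other rack loads, so it is not a GPU TDP.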

Cold, temperate, hot: one product, three cooling variants

Target PUE ranges from 1.10 to 1.30 using climate-tuned liquid cooling, free cooling where available, and closed-circuit towers for hot, humid regions. Water use stays within 0.05 to 0.25 L per kWh depending on climate.
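The PUE and water ranges translate into concrete numbers for one pod. The sketch below uses the usual definitions: PUE is total facility energy divided by IT energy, and WUE is site water in liters per IT kWh. It assumes the 0.05 to 0.25 L per kWh figure is a WUE in that sense, and that a constant 2 MW IT load runs all 8,760 hours of the year.

```python
"""Sketch: facility power and annual water use for a Twin Pod IT load.

Assumptions: constant IT load all year; water figure read as WUE
(liters per kWh of IT energy).
"""

HOURS_PER_YEAR = 8760


def facility_kw(it_kw: float, pue: float) -> float:
    """Power drawn at the meter, IT load plus cooling and losses."""
    return it_kw * pue


def annual_water_liters(it_kw: float, wue_l_per_kwh: float,
                        hours: float = HOURS_PER_YEAR) -> float:
    """Site water over a year, scaled by IT energy."""
    return it_kw * hours * wue_l_per_kwh


if __name__ == "__main__":
    it_kw = 2000.0  # assumed 2 MW IT load
    for pue, wue in ((1.10, 0.05), (1.30, 0.25)):  # best and worst stated cases
        print(f"PUE {pue:.2f}: {facility_kw(it_kw, pue):,.0f} kW at the meter, "
              f"WUE {wue:.2f}: {annual_water_liters(it_kw, wue):,.0f} L/year")
```

For a 2 MW IT load, the stated ranges work out to 2.2 to 2.6 MW at the meter. Water use comes to roughly 0.88 to 4.38 million liters a year.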

Unit economics that work at Series A scale

A 5 MW pod costs about 15 to 21 million dollars. At 80 percent utilization and 1.50 dollars per GPU hour, a phased 50 MW rollout is modeled to reach a 35 to 45 percent IRR by Year 5 while preserving relocation optionality. Energy, labor, and maintenance opex drop 20 to 50 percent versus fixed sites, driven by site arbitrage and lights-out operations.
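Checking an IRR claim like this one requires the full cash-flow model, which the text does not give. The sketch below covers only the two building blocks. The price (1.50 USD per GPU hour) and utilization (80 percent) come from the text. The IRR helper is a generic bisection root-finder on NPV. Any capex, opex, or ramp cash flows you feed it are your own assumptions, not the source's model.

```python
"""Sketch: GPU-hour revenue plus a bisection IRR helper.

Price and utilization defaults come from the text; cash flows passed to
irr() are user-supplied assumptions.
"""

HOURS_PER_YEAR = 8760


def annual_revenue(gpus: int, usd_per_gpu_hour: float = 1.50,
                   utilization: float = 0.80) -> float:
    """Gross yearly revenue from rented GPU hours."""
    return gpus * usd_per_gpu_hour * HOURS_PER_YEAR * utilization


def npv(rate: float, cash_flows: list[float]) -> float:
    """NPV of yearly cash flows; cash_flows[0] occurs at t = 0."""
    return sum(cf / (1.0 + rate) ** t for t, cf in enumerate(cash_flows))


def irr(cash_flows: list[float], lo: float = -0.99, hi: float = 10.0,
        tol: float = 1e-9, max_iter: int = 200) -> float:
    """IRR by bisection; assumes NPV changes sign exactly once on [lo, hi]."""
    f_lo = npv(lo, cash_flows)
    if f_lo * npv(hi, cash_flows) > 0:
        raise ValueError("NPV does not change sign on [lo, hi]")
    for _ in range(max_iter):
        mid = (lo + hi) / 2.0
        f_mid = npv(mid, cash_flows)
        if abs(f_mid) < tol or (hi - lo) / 2.0 < tol:
            return mid
        if f_lo * f_mid < 0:
            hi = mid  # root lies in the lower half
        else:
            lo, f_lo = mid, f_mid  # root lies in the upper half
    return (lo + hi) / 2.0


if __name__ == "__main__":
    print(f"per GPU per year: {annual_revenue(1):,.0f} USD")
    print(f"360-GPU module per year: {annual_revenue(360):,.0f} USD")
    # Toy check of the helper: invest 100 now, receive 110 a year later.
    print(f"toy IRR: {irr([-100.0, 110.0]):.4f}")
```

At the stated price and utilization, one GPU grosses about 10,512 USD a year. A 360-GPU compute module grosses about 3.78 million USD a year, before opex.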

Risk controls built into the form factor

- Power scaling: Deploy in 10 to 20 MW blocks aligned to substation steps or use containerized substations. Cuts stranded capacity and halves per-MW substation cost.

- Network fabric: Spine-leaf inside pods, spine-spine across pods, plus EVPN overlays. This trims switching capex by 60 to 70 percent at campus scale with a small latency tradeoff.

- Vendor risk: OCP-aligned designs, dual-source GPUs, and multi-vendor cooling keep pricing stable and lead times sane.

- Regulatory spread: Pre-certify a global reference design, carry a regional variants library, and favor export or special economic zones. Timeline compression can reach 50 percent across two countries.
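The stranded-capacity argument behind block-sized power provisioning is easiest to see with numbers. In the sketch below, demand is met in whole 2 MW Twin Pods and substation capacity arrives in whole blocks of a chosen size. The 26 MW demand figure is hypothetical, and the 50 MW block stands in for a one-shot build sized to the full rollout. The sketch does not model the per-MW substation cost claim, only the stranded-capacity effect.

```python
"""Sketch: stranded capacity when power is provisioned in fixed blocks.

Hypothetical inputs: demand level and block sizes are illustrative.
Stranded capacity = provisioned block capacity minus deployed pod load.
"""
import math

POD_MW = 2.0  # Twin Pod size from the text


def provisioned_mw(demand_mw: float, block_mw: float) -> tuple[float, float]:
    """Return (provisioned block capacity, deployed pod load) in MW."""
    pods = math.ceil(demand_mw / POD_MW)   # whole pods only
    load = pods * POD_MW
    blocks = math.ceil(load / block_mw)    # whole substation blocks only
    return blocks * block_mw, load


def stranded_mw(demand_mw: float, block_mw: float) -> float:
    """Capacity paid for but not yet carrying pod load."""
    provisioned, load = provisioned_mw(demand_mw, block_mw)
    return provisioned - load


if __name__ == "__main__":
    demand = 26.0  # hypothetical MW of pod demand at one point in the rollout
    for block in (10.0, 20.0, 50.0):
        print(f"{block:.0f} MW blocks: {stranded_mw(demand, block):.0f} MW stranded")
```

Smaller blocks track demand more closely. That is the mechanism the power-scaling bullet relies on.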
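The fabric layout in the network bullet can be written down as a link list. The sketch below builds a two-tier spine-leaf Clos inside each pod and then joins pods spine to spine. The pod, leaf, and spine counts are hypothetical. So is the cross-pod pattern, in which spine k in each pod peers with spine k in every other pod, one full mesh per spine plane. It is one possible reading of "spine-spine across pods". EVPN overlays ride on top of these links and do not change the physical count.

```python
"""Sketch: physical links for spine-leaf pods joined spine-to-spine.

Hypothetical: counts are illustrative; the same-index spine mesh is an
assumed interpretation of "spine-spine across pods".
"""
from __future__ import annotations

from itertools import combinations


def build_links(pods: int, leaves: int, spines: int) -> list[tuple[str, str]]:
    links: list[tuple[str, str]] = []
    # Inside each pod: every leaf connects to every spine (two-tier Clos).
    for p in range(pods):
        for leaf in range(leaves):
            for s in range(spines):
                links.append((f"p{p}-leaf{leaf}", f"p{p}-spine{s}"))
    # Across pods: same-index spines form a full mesh, one mesh per plane.
    for s in range(spines):
        for a, b in combinations(range(pods), 2):
            links.append((f"p{a}-spine{s}", f"p{b}-spine{s}"))
    return links


if __name__ == "__main__":
    links = build_links(pods=4, leaves=8, spines=2)
    cross = [link for link in links
             if link[0].split("-")[0] != link[1].split("-")[0]]
    print(len(links), "links total,", len(cross), "cross-pod")
```

No third switching tier appears in this pattern. Under the assumed mesh, cross-pod links grow as spines × pods × (pods - 1) / 2, which is worth weighing when sizing a campus.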

Go-to-market path you can run now

- Phase 1, months 0 to 8: Build and deploy one reference Twin Pod. Validate integration, telemetry, and sovereign security.

- Phase 2, months 9 to 18: Roll out 3 to 5 pods across two or three countries, behind-the-meter where energy is 0.02 to 0.07 dollars per kWh. Prove orchestration across borders.

- Phase 3, months 19 to 36: Enter volume production. Achieve price parity with hyperscale at around 20 pods while retaining mobility.
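The Phase 2 energy-price window sets the largest opex line for a behind-the-meter pod. The sketch below combines the stated 0.02 to 0.07 dollars per kWh with the stated PUE range of 1.10 to 1.30. It assumes a 2 MW IT load running all 8,760 hours of the year.

```python
"""Sketch: annual energy bill for one Twin Pod behind the meter.

From the text: price 0.02-0.07 USD/kWh, PUE 1.10-1.30.
Assumption: constant 2 MW IT load, 8,760 h per year.
"""

HOURS_PER_YEAR = 8760


def annual_energy_cost_usd(it_kw: float, pue: float, usd_per_kwh: float,
                           hours: float = HOURS_PER_YEAR) -> float:
    """Yearly energy cost at the meter (IT load scaled by PUE)."""
    return it_kw * pue * hours * usd_per_kwh


if __name__ == "__main__":
    it_kw = 2000.0  # assumed 2 MW IT load
    best = annual_energy_cost_usd(it_kw, 1.10, 0.02)
    worst = annual_energy_cost_usd(it_kw, 1.30, 0.07)
    print(f"energy cost per pod-year: {best:,.0f} to {worst:,.0f} USD")
```

Across the stated price and PUE ranges, this comes to roughly 0.39 to 1.59 million USD per pod per year.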

Where this wins first

- Countries with cheap power and slow grid interconnect queues

- Markets that need custody, auditability, and crypto-shredding for compliance

- Workloads that value proximity to energy, data sources, or sovereign boundaries

All three align with the modular, secure, lights-out pod design.

Conclusion

A sovereign, portable processing plant for compute is not a metaphor. It is a shippable, revenue-ready product with known costs, known timelines, and a clear expansion path. The Twin Pod at 2 MW is the practical minimum that stays portable, efficient, and financeable. That is why the next AI infrastructure unicorn will ship in containers.