Preamble:

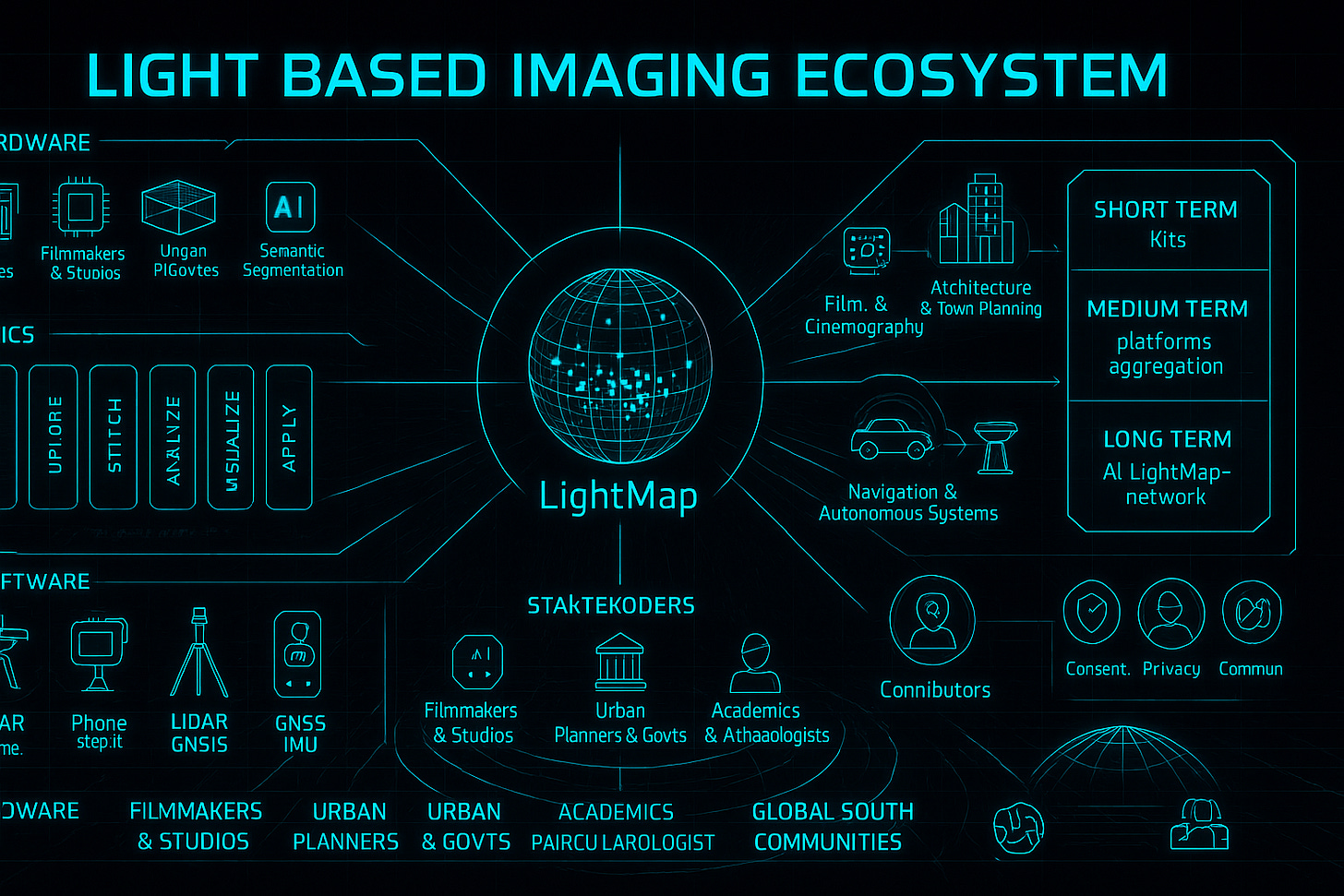

Can you build a shared imaging platform that fuses LiDAR, light field imaging, volumetric capture, and AI-driven reconstruction across phones, drones, and modular kits, then publish searchable 3D data for film, planning, navigation, archaeology, gaming, and global development? The workflow is simple and rigorous: capture, upload, stitch, analyze, visualize, apply, with automated QA, redaction, and open standards for GIS and XR. Start with film (film, TV, and social media), then gaming and navigation, then archaeology and cultural preservation as the beachhead, and then expand to urban planning and other domains. Ship modular kits in the short term, build aggregation and visualization platforms in the medium term, and grow an AI-powered LightMap in the long term. Ethics lead at every step: consent and provenance are recorded, faces and plates are blurred, sensitive areas are geofenced, and contributors are credited and paid under clear licensing.

Light-Based Imaging Ecosystem: Conceptual Overview

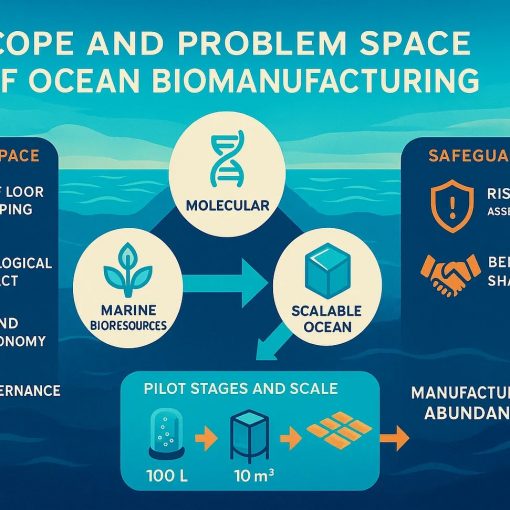

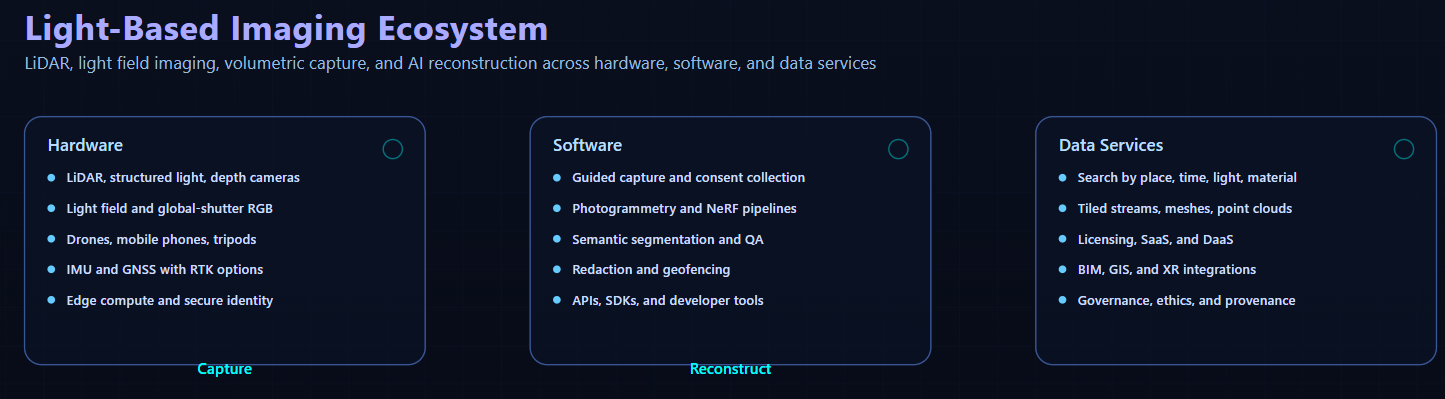

I am envisioning a multi-domain innovation platform built around LiDAR, light field imaging, volumetric capture, and AI-driven reconstruction. This ecosystem spans hardware, software, and data services, with applications in:

- Film & Cinematography

- Architecture & Town Planning

- Navigation & Autonomous Systems

- Archaeology & Cultural Preservation

- Gaming & Virtual Worlds

- Global Development & Accessibility

You cannot plan what you cannot see. Most of the world still lacks high-quality 3D data. That gap slows progress and hides culture. We can change that with light-based imaging, community capture, and ethical AI.

As usual, the outline analysis is located in the Artifacts. This idea is so raw that it is worth reconceptualising.

The definitions of all the terms used are in the appendices below:

- Core imaging and 3D capture: LiDAR; photogrammetry; NeRF (Neural Radiance Fields); light field (plenoptic) imaging; volumetric video capture; LED virtual production; semantic segmentation; the volumetric, immersive map concept (LightMap).

- Hardware and sensing kits: kit types; depth sensors; RGB capture; IMU and GNSS; edge compute; design goals.

- Data products, formats, and delivery: asset, scan, reconstruction; common 3D and GIS formats; OGC and GIS services; XR and AEC integration.

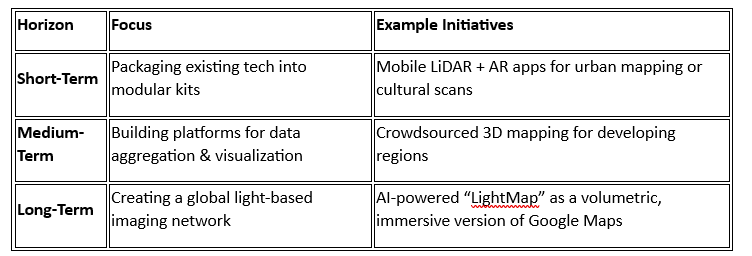

🚀 Opportunity Mapping: Short, Medium & Long-Term Horizons

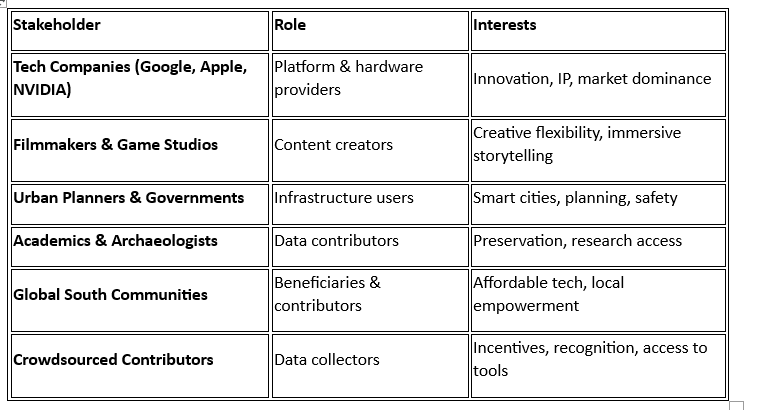

🧩 Stakeholder Identification & Analysis

🔧 People–Process–Technology Framework

- People: Creators, technicians, planners, educators, citizen scientists

- Process: Capture → Upload → Stitch → Analyze → Visualize → Apply

- Technology:

  - LiDAR, light field cameras, drones, mobile phones

  - AI (NeRF, photogrammetry, semantic segmentation)

  - AR/VR platforms, cloud databases, spatial computing
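The Process line above can be modelled as an ordered state machine. A scan moves forward one stage at a time and cannot be published (reach Visualize) until automated QA has passed. This is a minimal sketch under assumptions, not a specified design. `Stage`, `Scan`, `advance`, and the `qa_passed` flag are hypothetical names. Placing the QA gate between Analyze and Visualize is an illustrative choice.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Stage(IntEnum):
    """Pipeline stages in the order a scan must pass through them."""
    CAPTURE = 0
    UPLOAD = 1
    STITCH = 2
    ANALYZE = 3
    VISUALIZE = 4
    APPLY = 5


@dataclass
class Scan:
    # Hypothetical record for illustration; not a defined platform schema.
    scan_id: str
    stage: Stage = Stage.CAPTURE
    qa_passed: bool = False  # hypothetical flag, set by the automated QA step
    history: list = field(default_factory=list)

    def advance(self) -> Stage:
        """Move to the next stage; refuse to publish (VISUALIZE) before QA passes."""
        if self.stage == Stage.APPLY:
            raise ValueError(f"{self.scan_id} is already at the final stage")
        nxt = Stage(self.stage + 1)
        if nxt == Stage.VISUALIZE and not self.qa_passed:
            raise PermissionError(f"{self.scan_id} blocked: automated QA has not passed")
        self.history.append(self.stage)
        self.stage = nxt
        return nxt


if __name__ == "__main__":
    scan = Scan("scan-001")
    while scan.stage < Stage.ANALYZE:
        scan.advance()
    scan.qa_passed = True  # in practice set by the QA step after ANALYZE
    while scan.stage < Stage.APPLY:
        scan.advance()
    print(scan.stage.name, [s.name for s in scan.history])
```

Using an `IntEnum` makes the stage order explicit. A check such as "is this scan past Analyze?" then becomes a plain comparison.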

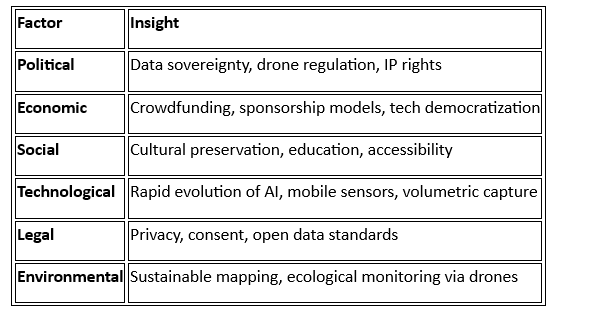

🌍 Strategic Analysis

PESTLE

🌐 Speculative Futures & Novel Concepts

- LightMap: A volumetric, AI-enhanced global map stitched from crowdsourced LiDAR and light field data

- Scan-to-World: Mobile kits for scanning environments, creating immersive AR/VR spaces

- Cultural Capsules: 3D-preserved heritage sites accessible via mobile or headset

- Drone Grid Mapping: Autonomous drones using sat-nav and LiDAR to build terrain models

- Ground + Sky Fusion: Combining ground-penetrating radar with aerial scans for archaeological surveys

- Crowdsourced Imaging Network: Sponsored cameras deployed globally to collect and share data
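Drone Grid Mapping is commonly flown as a back-and-forth ("lawnmower" or boustrophedon) survey pattern. The sketch below generates such waypoints over a rectangle in local metres, spacing flight lines so that neighbouring sensor swaths overlap. It rests on several assumptions:

- The area is a flat rectangle in a local east/north frame.
- `swath_m` (the ground footprint width of the LiDAR or camera) and `side_overlap` are hypothetical inputs.
- Conversion to sat-nav coordinates, altitude, wind, and geofenced no-fly zones are left out.

```python
import math


def lawnmower_waypoints(width_m, height_m, swath_m, side_overlap=0.3):
    """Return (east, north) waypoints in metres covering a width x height rectangle.

    Flight lines run north-south. Line spacing is swath * (1 - side_overlap),
    so neighbouring swaths overlap by the requested fraction.
    (Hypothetical helper for illustration.)
    """
    if swath_m <= 0:
        raise ValueError("swath_m must be positive")
    if not 0 <= side_overlap < 1:
        raise ValueError("side_overlap must be in [0, 1)")
    width_m, height_m = float(width_m), float(height_m)
    spacing = swath_m * (1 - side_overlap)
    # Lines from the west edge to the east edge; the small tolerance absorbs
    # float rounding, and the last line is clamped onto the east edge.
    n_lines = math.ceil(width_m / spacing - 1e-9) + 1
    xs = [min(i * spacing, width_m) for i in range(n_lines)]
    waypoints = []
    for i, x in enumerate(xs):
        # Alternate direction on each pass to avoid empty return legs.
        ys = (0.0, height_m) if i % 2 == 0 else (height_m, 0.0)
        waypoints.extend((x, y) for y in ys)
    return waypoints


if __name__ == "__main__":
    # 100 m x 50 m field, 50 m swath, 30% side overlap: lines every 35 m.
    for east, north in lawnmower_waypoints(100, 50, swath_m=50, side_overlap=0.3):
        print(f"E {east:6.1f} m  N {north:5.1f} m")
```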

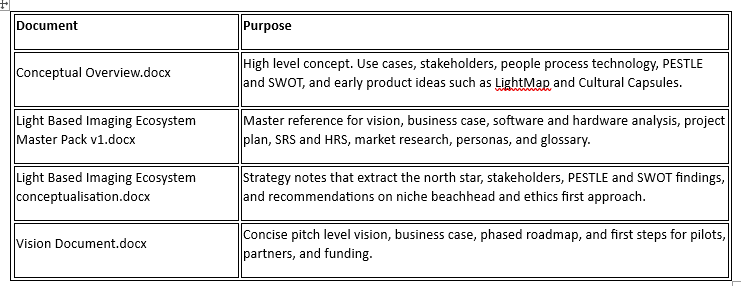

📄 Suggested research and documents for further development of concept

- Vision Document: Articulating the long-term impact and guiding principles

- Innovation Roadmap: Phased development across domains and technologies

- Business Case: Justifying investment with ROI, impact, and scalability

- Speculative Futures Brief: Exploring disruptive potential and ethical implications

- Modular Product Catalog: Hardware/software bundles for different use cases

- Stakeholder Engagement Plan: Strategies for onboarding and collaboration

Conclusion

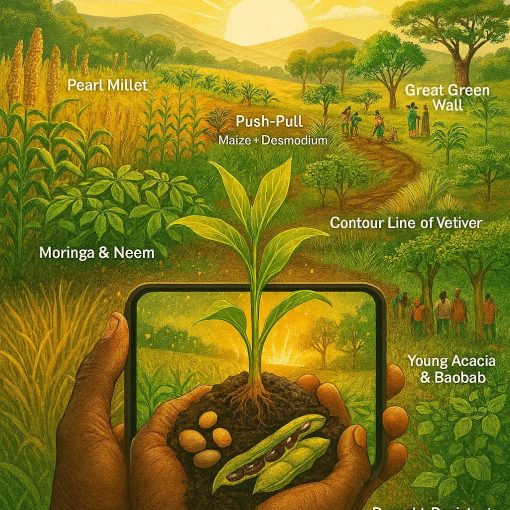

We now carry sensors that read depth, colour, and motion. Phones scan rooms. Drones map fields. New AI models rebuild entire scenes from a handful of photos. Put these pieces together with a simple idea: reward people for capturing the places they know best, then use AI to turn those captures into a living model of the world.

Start where the need is sharp and privacy risk is low.

Film and media, then gaming and navigation, could be a start. Archaeology and cultural heritage also fit: many sites lack current surveys, and local teams face travel and funding limits. A contributor can scan a wall relief with a phone and a compact depth sensor. The platform runs reconstruction, tags the mesh, and publishes a verified asset. A museum downloads the result for conservation. A school uses it to teach history with context and scale.

Urban planning comes next. Planners want to test traffic changes, flood risk, and lighting before concrete is poured. They need current streetscapes and interiors. The platform unifies crowd capture with drone flights. AI segments roads, signs, and vegetation. A digital twin appears that planners can filter by time and condition.

Media teams gain a new location layer. Need a market street at dawn after a rainstorm? Search by light, material, and era. License the dataset, or sponsor a local crew to scan the real thing.

Ethics must lead. The platform has a clear charter. No scans near private homes without consent. Automatic blurring of faces and plates. Strong local control of data sharing. Contributors get paid and credited. Communities decide what stays public.

This is not a map for machines alone. It is a new common for people who make and care for places. When you give people the tools to capture their world, you help everyone see it.

Appendices

Tech glossary

Core imaging and 3D capture

- LiDAR. Depth sensing that measures distance with laser pulses. Used for mapping, navigation, archaeology, and urban scans.

- Photogrammetry. 3D reconstruction from overlapping 2D images. Outputs meshes or point clouds.

- NeRF, Neural Radiance Fields. AI method that learns a scene from photos, then synthesizes new views for navigable 3D.

- Light field, plenoptic imaging. Captures light direction as well as color and intensity, which enables refocus and depth control in post.

- Volumetric video capture. Many synchronized cameras record a subject from all angles, then reconstruct a 3D performance you can view in AR or VR.

- LED virtual production. Real environments rendered on LED walls for in-camera VFX, with realistic on-set lighting.

- Semantic segmentation. Automatic labeling of pixels or 3D points by class to power search, masking, and analytics.

- Volumetric, immersive map concept, LightMap. A proposed global, AI-assisted, time-aware 3D index built from crowdsourced scans.
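The LiDAR entry above rests on a simple relation for pulsed sensors. A pulse travels to the target and back, so the range is half the distance light covers in the measured time: d = c·t/2. This is a minimal sketch that assumes a pulsed time-of-flight sensor and uses the vacuum speed of light. `lidar_range_m` and `range_resolution_m` are hypothetical helper names. Phase-shift and FMCW LiDAR compute range differently.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # exact SI value, in vacuum


def lidar_range_m(round_trip_s: float) -> float:
    """Range to target from the round-trip time of one laser pulse (hypothetical helper).

    The pulse travels out and back, so the one-way distance is c * t / 2.
    Uses the vacuum speed of light; light in air is ~0.03% slower, ignored here.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2


def range_resolution_m(timing_resolution_s: float) -> float:
    """Smallest range step a timer of the given resolution can distinguish."""
    return SPEED_OF_LIGHT_M_S * timing_resolution_s / 2


if __name__ == "__main__":
    # A return after 200 ns corresponds to a target roughly 30 m away.
    print(f"{lidar_range_m(200e-9):.2f} m")
    # A 1 ns timer resolves range in steps of about 15 cm.
    print(f"{range_resolution_m(1e-9):.3f} m")
```

The second helper shows why timing electronics matter: each nanosecond of timing uncertainty is worth roughly 15 cm of range.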

Hardware and sensing kits

- Kit types. Phone plus depth module, drone kit with LiDAR or structured light, tripod stereo or plenoptic rig, backpack mobile mapper.

- Depth sensors. Time-of-flight, structured light, or solid-state LiDAR, selected by range and accuracy needs.

- RGB capture. Global shutter, HDR, and low light performance improve reconstruction quality.

- IMU and GNSS. Motion and location sensors for pose estimation, with options like SBAS and RTK for higher accuracy.

- Edge compute. On-device prechecks, compression, and optional embedded GPU for field preview and resilience.

- Design goals. Low cost, rugged, hot-swappable power, offline capture, secure device identity.
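The on-device prechecks listed under Edge compute could include a quick blur test before a frame is kept. A common heuristic is the variance of the Laplacian: sharp frames have strong edges and therefore high variance, while blurred frames have low variance.

This sketch uses plain Python on a small grayscale frame (a list of rows of 0 to 255 values), so it runs without dependencies. The threshold of 100 is a hypothetical placeholder to tune per sensor. On a real device, an optimized library such as OpenCV would do the same job much faster.

```python
from statistics import pvariance


def laplacian_variance(gray):
    """Variance of the 4-neighbour Laplacian over the frame's interior pixels."""
    h, w = len(gray), len(gray[0])
    if h < 3 or w < 3:
        raise ValueError("frame must be at least 3x3")
    responses = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Discrete Laplacian: sum of the 4 neighbours minus 4x the centre.
            lap = (gray[y - 1][x] + gray[y + 1][x] + gray[y][x - 1]
                   + gray[y][x + 1] - 4 * gray[y][x])
            responses.append(lap)
    return pvariance(responses)


def passes_sharpness_precheck(gray, threshold=100.0):
    """Keep the frame only if its Laplacian variance clears the hypothetical threshold."""
    return laplacian_variance(gray) >= threshold


if __name__ == "__main__":
    flat = [[128] * 8 for _ in range(8)]  # featureless, like a heavily blurred frame
    checker = [[255 * ((x + y) % 2) for x in range(8)] for y in range(8)]  # strong edges
    print(passes_sharpness_precheck(flat), passes_sharpness_precheck(checker))
```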

Data products, formats, and delivery

- Asset, scan, reconstruction. Raw frames and logs form an asset, grouped into a scan, then reconstructed into mesh, point cloud, textures, and metadata.

- Common 3D and GIS formats. OBJ, PLY, glTF, LAS or LAZ, GeoTIFF, CityGML for storage and exchange.

- OGC and GIS services. WMS, WFS, OGC 3D Tiles, and GeoParquet support web mapping and large-scale tiling.

- XR and AEC integration. Unity, Unreal, WebXR pipelines, plus IFC and BIM exports for planning and construction.

- Catalog and search. Find data by place, time, material, and light, with previews on a map and in 3D.
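The asset, scan, reconstruction hierarchy and search by place, time, material, and light can be sketched with plain data classes and a filter. Everything here is hypothetical. The field names, the latitude/longitude bounding box standing in for "place", and the free-text `materials` and `light` tags are illustrative assumptions rather than a defined schema. A production catalog would more likely sit on a spatial database and serve results through formats such as OGC 3D Tiles.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Asset:
    """Raw capture material: frames or logs from one device session."""
    path: str
    kind: str  # hypothetical labels, e.g. "rgb_frames", "depth", "imu_log"


@dataclass
class Scan:
    """A group of assets covering one place at one time."""
    scan_id: str
    lat: float
    lon: float
    captured: date
    assets: list = field(default_factory=list)


@dataclass
class Reconstruction:
    """Pipeline output for one scan, plus searchable metadata (hypothetical fields)."""
    scan: Scan
    outputs: dict   # e.g. {"mesh": "scan.glb", "points": "scan.laz"}
    materials: set  # e.g. {"stone", "wet_asphalt"}
    light: str      # e.g. "dawn", "overcast", "night"


def search(catalog, bbox, start, end, material=None, light=None):
    """Return reconstructions inside bbox = (min_lat, min_lon, max_lat, max_lon),
    captured between start and end (inclusive), optionally matching tags.
    The simple box test ignores boxes that cross the antimeridian."""
    min_lat, min_lon, max_lat, max_lon = bbox
    hits = []
    for rec in catalog:
        s = rec.scan
        in_place = min_lat <= s.lat <= max_lat and min_lon <= s.lon <= max_lon
        in_time = start <= s.captured <= end
        if not (in_place and in_time):
            continue
        if material is not None and material not in rec.materials:
            continue
        if light is not None and rec.light != light:
            continue
        hits.append(rec)
    return hits


if __name__ == "__main__":
    # Made-up example record, not real data.
    street = Scan("scan-42", 51.5, -0.1, date(2024, 5, 3),
                  [Asset("frames/0001.jpg", "rgb_frames")])
    rec = Reconstruction(street, {"mesh": "scan-42.glb"}, {"stone", "wet_asphalt"}, "dawn")
    hits = search([rec], (51.0, -1.0, 52.0, 0.0), date(2024, 1, 1), date(2024, 12, 31),
                  material="wet_asphalt", light="dawn")
    print([h.scan.scan_id for h in hits])
```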

Processing pipeline and quality

- Guided capture. Mobile app prompts for coverage, overlap, and exposure, and captures consent artifacts.

- Reconstruction. Photogrammetry and NeRF pipelines turn images and depth into 3D scenes.

- Automated QA. Coverage, sharpness, and drift checks gate publishing and payouts.

- Redaction and geofencing. Faces, plates, and sensitive areas are filtered or blurred before release.

- Throughput and latency KPIs. Track GPU hours per job, accuracy versus ground truth, and search latency at high percentiles such as p95 and p99.
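The automated QA and redaction/geofencing steps can feed a single publish decision. This sketch rests on three assumptions:

- The metric names and thresholds in `QA_THRESHOLDS` are hypothetical placeholders.
- Each sensitive area is a simple polygon in the same planar coordinates as the scan points.
- Containment uses the standard ray-casting (even-odd) point-in-polygon test.

```python
# Hypothetical thresholds; real values would be tuned per kit and use case.
QA_THRESHOLDS = {
    "min_coverage": 0.9,     # fraction of the target area observed
    "min_sharpness": 100.0,  # e.g. Laplacian variance per frame
    "max_drift_m": 0.05,     # accumulated pose drift versus control points
}


def point_in_polygon(x, y, polygon):
    """Ray casting: toggle 'inside' each time a ray cast to the right crosses an edge."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the ray's y can cross it (this also rules out y1 == y2).
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def qa_gate(metrics, scan_points, sensitive_zones):
    """Return (publishable, reasons). Any failure blocks publishing and payout."""
    reasons = []
    if metrics["coverage"] < QA_THRESHOLDS["min_coverage"]:
        reasons.append("insufficient coverage")
    if metrics["sharpness"] < QA_THRESHOLDS["min_sharpness"]:
        reasons.append("frames too blurry")
    if metrics["drift_m"] > QA_THRESHOLDS["max_drift_m"]:
        reasons.append("pose drift too high")
    for zone in sensitive_zones:
        if any(point_in_polygon(x, y, zone) for x, y in scan_points):
            reasons.append("scan overlaps a geofenced area")
            break
    return (not reasons, reasons)


if __name__ == "__main__":
    sacred_site = [(10, 10), (20, 10), (20, 20), (10, 20)]  # hypothetical geofence
    good = {"coverage": 0.95, "sharpness": 250.0, "drift_m": 0.02}
    print(qa_gate(good, [(0, 0), (5, 5)], [sacred_site]))
    print(qa_gate(good, [(0, 0), (15, 15)], [sacred_site]))
```

The gate returns the reasons, not just a boolean, so reviewers and contributors can see why a scan was held back.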

Privacy, security, and governance

- Consent and provenance. Every dataset carries evidence of consent, scope, and licensing, with field-level encryption of PII.

- Differential privacy. Adds calibrated statistical noise to published aggregates so that no individual contributor's data can be singled out, while preserving analytic value.

- Security controls. SOC 2 alignment, encryption at rest and in transit, HSM backed keys, secure boot, attestation, and signed updates.

- Ethical charter. Do not capture private spaces without proof of consent, respect sacred sites, redact sensitive features, share value locally.
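Differential privacy is usually applied to published aggregates rather than to raw scans. The textbook example is the Laplace mechanism. A count (say, how many scans touched a tile) is released with noise drawn from a Laplace distribution whose scale is the query's sensitivity divided by the privacy budget ε.

This sketch assumes each contributor adds at most one scan per tile, so the sensitivity is 1. The ε values are illustrative. A production system should use a vetted DP library rather than Python's `random`.

```python
import random
from typing import Optional


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw from Laplace(0, scale): the difference of two i.i.d. exponentials
    with mean `scale` is Laplace-distributed with that scale."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def dp_count(true_count: int, epsilon: float, sensitivity: float = 1.0,
             rng: Optional[random.Random] = None) -> float:
    """Release a count with epsilon-differential privacy via the Laplace mechanism.

    Assumes `sensitivity` bounds how much one contributor can change the count
    (1 if each contributor adds at most one scan per tile).
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    return true_count + laplace_noise(sensitivity / epsilon, rng)


if __name__ == "__main__":
    rng = random.Random(7)  # fixed seed only so the demo is repeatable
    # Smaller epsilon means stronger privacy and a noisier published count.
    for eps in (0.1, 1.0, 10.0):
        print(f"epsilon={eps}: {dp_count(120, eps, rng=rng):.1f}")
```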

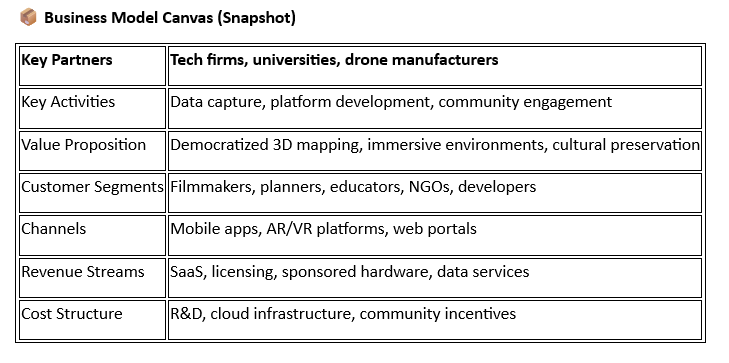

Platform, roles, and business model

- User roles. Contributors capture and upload, customers search and license, reviewers run QA and ethics checks, admins manage policy and partners.

- APIs and SDKs. Access for retrieval, analytics, and integration with dev tools and GIS.

- SaaS and DaaS. Software for reconstruction and QA, plus data subscriptions and project-based data requests.

- Contributor rewards. Payouts tied to quality, coverage, and demand, with balances and withdrawal in the app.

- Roadmap phases. MVP, Beta, and Scale, with targets for contributors, scans, processing time, and paying customers.

- RACI and KPIs. Clear ownership across product, engineering, ops, legal, and partners, with metrics like cost per qualified scan and time to fulfill a request.
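Payouts tied to quality, coverage, and demand, and the cost-per-qualified-scan KPI, reduce to small formulas. This sketch is illustrative only. The multiplicative form, the base rate, and the demand cap are hypothetical choices, and quality and coverage are assumed to be QA scores in [0, 1].

```python
def scan_payout(base_rate: float, quality: float, coverage: float,
                demand: float, demand_cap: float = 3.0) -> float:
    """Payout for one scan that has passed QA (hypothetical formula).

    quality, coverage: automated QA scores in [0, 1].
    demand: multiplier for how sought-after the tile or request is
            (1.0 = typical), clamped to [0, demand_cap] so one hot spot
            cannot absorb the whole budget.
    """
    for name, value in (("quality", quality), ("coverage", coverage)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be in [0, 1]")
    multiplier = min(max(demand, 0.0), demand_cap)
    # Rounded to cents for display; a real ledger would use decimal.Decimal.
    return round(base_rate * quality * coverage * multiplier, 2)


def cost_per_qualified_scan(total_cost: float, qualified_scans: int) -> float:
    """KPI from the RACI line: total spend divided by scans that passed QA."""
    if qualified_scans <= 0:
        raise ValueError("need at least one qualified scan")
    return total_cost / qualified_scans


if __name__ == "__main__":
    print(scan_payout(base_rate=10.0, quality=0.9, coverage=0.8, demand=1.5))
    print(cost_per_qualified_scan(total_cost=500.0, qualified_scans=40))
```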

Strategy and use cases

- People, process, technology. Capture, upload, stitch, analyze, visualize, apply, with creators, technicians, planners, educators, and citizen scientists.

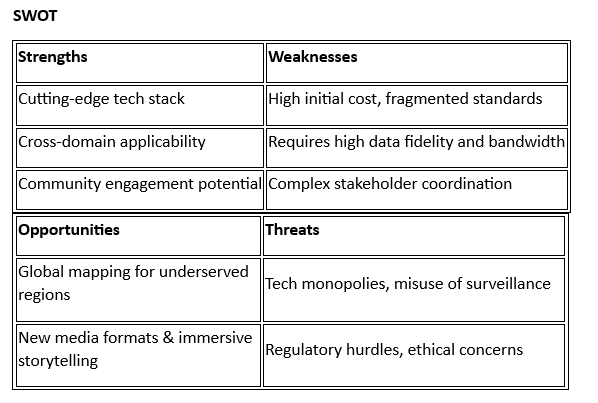

- PESTLE and SWOT. Evaluate political, economic, social, technological, legal, and environmental factors, then map strengths, weaknesses, opportunities, and threats to guide choices.

- Beachhead market. Start with archaeology and cultural preservation to validate tech and ethics, then expand to planning and media.

- Crowdsourced network. Sponsored cameras, drone grids, and bounty tiles to cover areas of interest with local benefit and clear consent.
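Bounty tiles need a shared way to name a patch of ground. One widely used option, assumed here rather than specified by the platform, is the Web Mercator XYZ ("slippy map") tile scheme used by most web maps. At zoom z the world is a 2^z by 2^z grid, and any latitude/longitude falls in exactly one integer tile (x, y). The `Bounty` class, `matching_bounty`, and the reward amounts are hypothetical.

```python
import math
from dataclasses import dataclass


def latlon_to_tile(lat: float, lon: float, zoom: int) -> tuple:
    """Web Mercator XYZ tile (x, y) containing (lat, lon) at the given zoom."""
    lat = max(min(lat, 85.05112878), -85.05112878)  # Mercator's valid latitude range
    n = 2 ** zoom
    x = int((lon + 180.0) / 360.0 * n)
    lat_rad = math.radians(lat)
    y = int((1.0 - math.asinh(math.tan(lat_rad)) / math.pi) / 2.0 * n)
    # Clamp so lon = 180 or the polar limit stays on the grid.
    return min(max(x, 0), n - 1), min(max(y, 0), n - 1)


@dataclass
class Bounty:
    """Hypothetical open request for a qualified scan of one tile."""
    zoom: int
    x: int
    y: int
    reward: float  # hypothetical amount paid for a qualified scan of this tile
    claimed: bool = False


def matching_bounty(bounties, lat, lon):
    """Return the first open bounty whose tile contains the capture location."""
    for b in bounties:
        if not b.claimed and (b.x, b.y) == latlon_to_tile(lat, lon, b.zoom):
            return b
    return None


if __name__ == "__main__":
    site = (51.5007, -0.1246)  # example coordinates only
    zoom = 16
    bounties = [Bounty(zoom, *latlon_to_tile(*site, zoom), reward=25.0)]
    print(matching_bounty(bounties, *site))
```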