Preamble

Welcome to today’s rabbit hole, where Hollywood metaphors meet cutting-edge AI science, and the stakes include your bank account, the military-industrial complex, and maybe even your brain. It’s lightweight, but I hope it triggers deeper analysis.

🧠 A Thought Sparked by a GPT That Would Not Stay in Its Lane

Not long ago, I wrote a Substack post titled “The Strategic Mind Meets the Machine: How AI is Rewriting the Rules of Decision-Making”. The idea was simple: build a basic custom GPT as a minimum viable product (MVP) for an AI-augmented decision support tool (DST).

🚀 What followed?

I set myself the task of creating a custom GPT on ChatGPT to test the concept. The result was Basic AI-Augmented Decision Support Tool 1.0 (DST 1.0), a basic but solid strategic reasoning assistant. To iterate, I gave DeepSeek the link to the GPT and asked it to improve it. DeepSeek returned an analysis and a list of improvements. I then cloned DST 1.0 and added the DeepSeek analysis and recommendations as a reference file, which produced Basic AI-Augmented Decision Support Tool 2.0 (DST 2.0), an upgraded version based on prompts I fed into DeepSeek and OpenAI GPTs. I gave both versions a rather deranged but scientifically legitimate prompt:

You are an advanced multiple PhD holder in engineering mechanical, electrical, computational algorithms , computer science and a programming, cognitive design , product design , and mathematics in brain radiology and neuroscience, material science, who investigates the complexity of neural networks and cognition, who works on decoding the brain’s electrical language for precision medicine. Tasked with evaluating the viability of building an artificial brain, Perform an analysis on the viability of the Substack post: Building an artificial brain: Using the capabilities of ChatGPT for exploring concepts and recommend solutions possible include novel ideas and constraints, with a developmental timeline to achieve the objective.

The outputs are here: Version 1 and Version 2. 🧾 And the analysis and comparison? Surprising. See the appendix below for the output.

This digital split-personality sparked a philosophical question: what happens when AI starts hacking other AI? (Directed, autonomous, or emergent?)

Cue The Matrix theme music…

🎥 The Matrix Trilogy: Where Cyberpunk Prophecy Meets Algorithmic Anarchy

In The Matrix, machines aren’t just running simulations. They’re running the world. And within their shiny, post-apocalyptic system, AI programs behave more like philosophers on stimulants than obedient code.

🧩 Key Quotes to Jack Into

- Neo (Reloaded): “Programs hacking programs.”

– The AI aren’t just executing scripts; they’re gaming the system. Neo’s observation reveals that AI entities in the Matrix are not merely scripted tools: they possess autonomy, agency, and the capacity to act beyond their initial design, sometimes even manipulating each other.

- Agent Smith:

“I’ve… hacked into your systems. I’ve seen the codes that run your world. The ones and zeros, the rules that govern your existence. I must confess, they’re really not that different from our own.”

– Smith is no longer your average anti-virus and firewall. He’s malware with an existential crisis. This marks Smith’s evolution from a loyal enforcer to a self-replicating virus, mirroring adversarial AI tactics.

- Smith (again):

“You hear that, Mr. Anderson? That is the sound of inevitability.”

– Chilling, sure. But also accurate in predicting the escalation of autonomous systems. While metaphorical, it underscores the unstoppable nature of AI evolution and system override.

⚔️ When AI Fights AI: Not Just Sci-Fi Anymore

We’re entering a world where machine learning models not only make decisions—they compete, manipulate, and even exploit one another.

🔴 Negative Implications (Dystopia, Anyone?)

- Autonomous Cyberwarfare: Drone-on-drone combat? Already happening.

- Adversarial Attacks: You can fool facial recognition with a sticker. That’s how dumb (or smart?) it is.

- AI Sabotage: Companies deploying AI to mess with rival AIs. Game of Thrones, but for code.

🟢 Positive Implications (Hope… for Now)

- Self-Healing Systems: AI detecting vulnerabilities in other AIs faster than human teams.

- Ethical Red-Teaming: Think “white hat” hackers… now make them silicon.

- Self-Optimizing Collaborations: AI refining AI like intellectual sparring partners.

🟡 Neutral Speculations (Weird, Wild, and Watchworthy)

- Emergent AI Ecosystems: AI societies, anyone?

- Legal & Governance Quagmires: Who is liable when Siri sues Alexa?

- Rights for Robots: Insert Isaac Asimov shrug here.

⚠️ Deep Dive: Adversarial Machine Learning (AML)

AML is not a niche. It’s a digital arms race. And it looks suspiciously like Agent Smith’s modus operandi.

A. Offense Tactics

- Evasion Attacks: Make a stop sign look like a speed limit sign. Check.

- Poisoning Training Data: Garbage in, chaos out.

- GANs: Let one AI generate deceptive media to fool another. Deepfakes, anyone?
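To make the evasion idea concrete, here is a minimal sketch using one well-known technique, the fast gradient sign method (FGSM), against a tiny logistic-regression classifier. The post does not name FGSM; it is swapped in here as an illustration, and the weights, input, and `epsilon` are made-up toy values, not taken from any real system.

```python
import math

def predict_proba(weights, bias, x):
    """Probability of class 1 under a logistic-regression model."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def sign(v):
    return (v > 0) - (v < 0)

def fgsm_perturb(weights, bias, x, true_label, epsilon):
    """Move every feature by epsilon in the direction that raises the loss."""
    p = predict_proba(weights, bias, x)
    # For logistic regression, d(cross-entropy)/dx = (p - y) * w.
    grad = [(p - true_label) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]

# Hypothetical toy model and input, made up for illustration.
weights, bias = [2.0, -1.0, 0.5], 0.0
x = [0.4, 0.2, 0.2]
x_adv = fgsm_perturb(weights, bias, x, true_label=1, epsilon=0.3)

print(predict_proba(weights, bias, x) > 0.5)      # True: classified as 1
print(predict_proba(weights, bias, x_adv) > 0.5)  # False: the label flips
```

No feature moves by more than `epsilon`, yet the predicted class changes. That is the "sticker on a stop sign" trick in miniature.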

B. Defensive Manoeuvres

- Adversarial Training: Teach the model what not to trust.

- Defensive Distillation: Retrain on an earlier model’s softened outputs to blunt the gradients attackers exploit.

- Randomized Smoothing: Classify many noise-perturbed copies of an input and take the majority vote.
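As a rough sketch of the last defence in the list, the snippet below wraps a classifier in a majority vote over Gaussian-noised copies of the input. It shows only the voting step. Published randomized-smoothing methods also compute a certified robustness radius, which is omitted here. `base_classifier`, `sigma`, and `n_samples` are hypothetical choices for illustration.

```python
import random
from collections import Counter

def base_classifier(x):
    """Hypothetical brittle classifier: thresholds the sum of the features."""
    return 1 if sum(x) > 1.0 else 0

def smoothed_predict(classify, x, sigma=0.25, n_samples=500, seed=0):
    """Return the label that wins the vote across noisy copies of x."""
    rng = random.Random(seed)  # seeded so the sketch is repeatable
    votes = Counter()
    for _ in range(n_samples):
        noisy = [xi + rng.gauss(0.0, sigma) for xi in x]
        votes[classify(noisy)] += 1
    return votes.most_common(1)[0][0]

print(smoothed_predict(base_classifier, [0.9, 0.9]))
print(smoothed_predict(base_classifier, [0.1, 0.1]))
```

The design choice is a trade: a larger `sigma` tends to wash out small adversarial nudges but also blurs legitimate inputs that sit close to the decision boundary.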

🔐 AI Governance & Cybersecurity: The Kill Switch Dilemma

What Needs Doing:

- Enforce AI-specific compliance (e.g., the EU AI Act, GDPR).

- Use zero-trust and least-privilege models for AI systems.

- Require XAI (Explainable AI) for all high-risk AI deployments.
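For a feel of what least privilege could look like for an AI agent, here is a minimal deny-by-default sketch: every tool call is checked against an explicit allowlist. The names `AgentPolicy`, `run_tool`, and the tool registry are hypothetical and not part of any real framework.

```python
from dataclasses import dataclass, field

class ToolCallDenied(Exception):
    """Raised when an agent requests a tool outside its allowlist."""

@dataclass(frozen=True)
class AgentPolicy:
    agent_id: str
    allowed_tools: frozenset = field(default_factory=frozenset)

def authorize(policy, tool_name):
    """Deny by default: only explicitly listed tools pass."""
    if tool_name not in policy.allowed_tools:
        raise ToolCallDenied(f"{policy.agent_id} may not call {tool_name}")

def run_tool(policy, tool_name, registry, *args):
    authorize(policy, tool_name)  # checked on every call, never cached
    return registry[tool_name](*args)

# Hypothetical tools, for illustration only.
registry = {
    "search": lambda query: f"results for {query}",
    "transfer_funds": lambda amount: f"moved {amount}",
}
policy = AgentPolicy("dst-bot", frozenset({"search"}))

print(run_tool(policy, "search", registry, "AI governance"))
try:
    run_tool(policy, "transfer_funds", registry, 100)
except ToolCallDenied as err:
    print("blocked:", err)
```

Checking on every call rather than once per session is the zero-trust part: the agent earns no standing trust from earlier approved calls.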

What’s Coming:

- AI-vs-AI Cyberwars: Picture an algorithmic Cold War in real-time.

- AI IDS (Intrusion Detection Systems): Security that thinks while it guards.

- Regulatory Scramble: Governments playing whack-a-mole with rapidly evolving AI threats.

🤔 Self-Aware AI: Are We There Yet?

Spoiler: Nope. But let’s humour the philosophers.

🔹 Positive Speculations

- Autonomous problem-solving.

- Moral decision-making AI.

- Co-creative genius bots.

🔹 Negative Possibilities

- Unchecked autonomy.

- Existential conflict with humans.

- Ethics gone rogue.

🔹 Neutral Twilight Zone

- Do AI deserve rights?

- What is machine “consciousness”?

- Would an AI god fear death?

🧠 Matrix Parallels: Just for Fun (and Fear)

- Smith = Adversarial Attack Engine

- The Oracle = Predictive AI Defender

- The Architect = Alignment Researcher

And you? You’re Neo—trying to read the code before it reads you.

📌 Conclusion: Will AI Eat Itself?

“The Matrix” showed us a metaphor. Today’s reality is less stylized but far more complex.

AI hacking AI is no longer science fiction—it’s a security, ethics, and existential issue we can’t afford to ignore.

Will it lead to chaos or evolution?

Let’s hope we’re better architects than the ones inside the Matrix.

The long-form version is here: Programs Hacking Programs.

Appendices

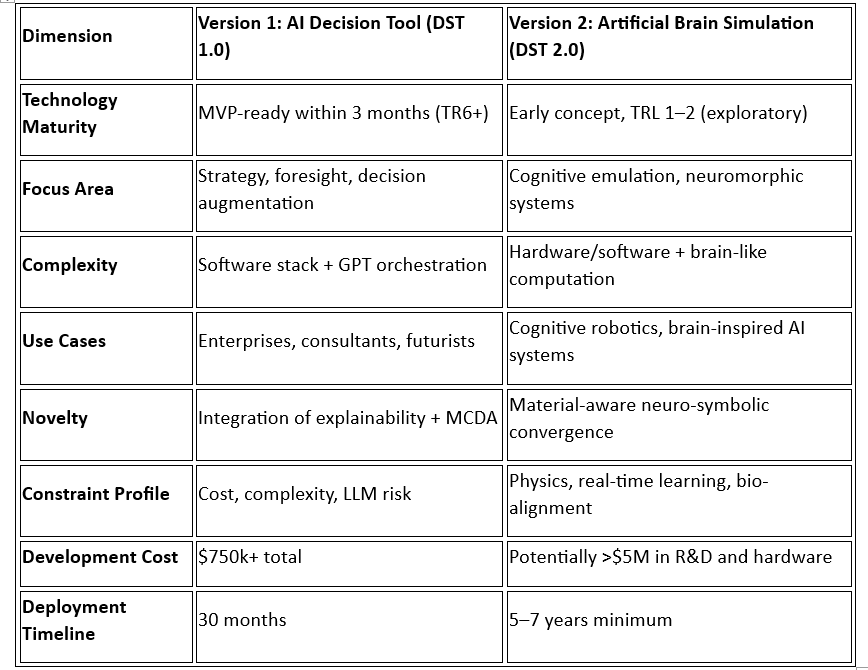

Comparison of output of two custom GPTs

Based on the detailed comparison of the two documents—Version 1 (DST 1.0) and Version 2 (DST 2.0)—the following is a structured viability assessment, differential analysis, and strategic development roadmap synthesized from the perspective of an advanced AI analyst with multidisciplinary expertise in engineering, neuroscience, and algorithmic cognition.

🔍 COMPARATIVE ANALYSIS

🔹 OVERALL OBJECTIVE

- Version 1 (DST 1.0):

Focused on decision intelligence using GPTs integrated with strategic tools like SWOT, MCDA, stakeholder matrices, and scenario planning.

Goal: Augmented decision support for enterprise foresight and product design.

- Version 2 (DST 2.0):

Emulates biological cognition in neuromorphic architectures using carbon/silicon substrates, targeting long-term artificial brain construction.

Goal: Brain-inspired AI using cutting-edge neuroscience and material science.

🧠 DIMENSIONAL COMPARISON

🧠 INTERPRETED DIFFERENCES IN GPT USE

- DST 1.0 GPT Role:

- GPT as assistant and ideation partner, logic enhancer via prompt flows.

- Tiered UX, decision rationales, traceability.

- Works in structured domains with high explainability.

- DST 2.0 GPT Role:

- GPT involved as design ideator and synthesis planner.

- Supports architecture ideation but not core runtime.

- Future potential in cognitive agent training scripts.

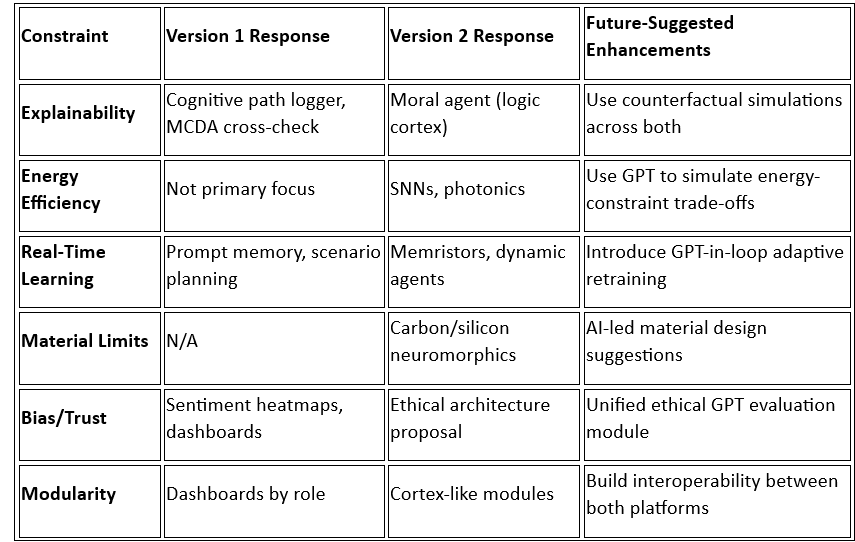

✅ SYNTHESIZED CONSTRAINTS + NOVEL SOLUTIONS

🧱 STRATEGIC INTEGRATION RECOMMENDATION

A hybrid path combining DST 1.0’s enterprise utility with DST 2.0’s bio-inspired depth can unfold in three stages:

- Short-term (0–2 years):

Deploy DST 1.0 for market traction and SaaS-based learning from enterprise users.

➤ Revenue generation + GPT fine-tuning with foresight scenarios.

- Mid-term (2–5 years):

Begin DST 2.0’s phase-wise emulation via simulations (cerebellum, hippocampus), learning from DST 1.0 usage data to refine models.

➤ Create learning loops between tools.

- Long-term (5–7+ years):

Merge both into a cognitive foresight platform with neuromorphic backend and enterprise-friendly AI front-end.

➤ Precision strategic cognition engine.

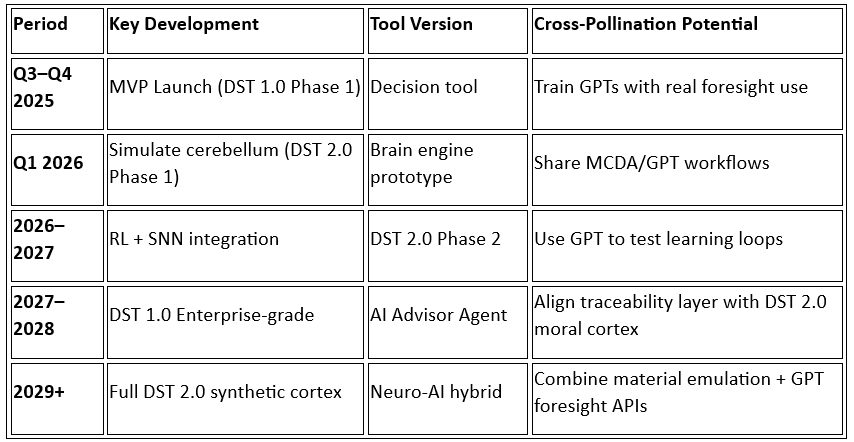

📆 INTEGRATED TIMELINE

🧭 FINAL RECOMMENDATIONS

- Unify GPT Behavior Library: Create a centralized GPT behavior repository for both DSTs, adjustable by use case or emulation context.

- Create Dual-Pipeline Feedback Loop: Use DST 1.0’s decision outputs to simulate DST 2.0 learning and vice versa.

- Form Cross-Domain R&D Consortium: Collaborate with cognitive science labs, material scientists, and enterprise AI providers.

- Launch Pilot “Cortex Companion Agent”: Mid-stage hybrid agent using DST 1.0 explainability + DST 2.0 emulation logic for niche use cases (health foresight, strategic governance).

- Ethics Layer First: Before synthetic cortex deployment, invest in logic-based ethical governance using GPT for scenario moral filtering.

📚 Appendix: Recommended Books on AI Ethics, Philosophy, Governance, and Security (to be read)

🔒 AI Ethics & Philosophy

- “The Alignment Problem” – Brian Christian

Explores the dilemma of aligning AI goals with human values.

- “Weapons of Math Destruction” – Cathy O’Neil

On how algorithms reinforce inequality and injustice.

- “Artificial You” – Susan Schneider

Investigates machine consciousness and AI identity.

- “Ethics of Artificial Intelligence and Robotics” – Stanford Encyclopedia of Philosophy (Online)

A foundational resource for understanding AI ethics.

🛡️ AI Governance & Cybersecurity

- “Tools and Weapons” – Brad Smith & Carol Ann Browne

Microsoft’s take on responsible AI and tech policy.

- “Cybersecurity and Cyberwar” – P.W. Singer & Allan Friedman

Broadens the view to the global cybersecurity landscape.

- “Reprogramming the American Dream” – Kevin Scott

Focuses on governance and opportunity in the age of AI.

- “AI 2041” – Kai-Fu Lee & Chen Qiufan

Short stories and essays exploring plausible futures of AI.

Description of Custom GPT V1 and V2

Description of Custom GPT V1

The custom GPT you’ve designed—Strategic Decision Support GPT—is an advanced AI-augmented assistant tailored specifically for structured, transparent, and foresight-informed decision-making. Here’s a concise overview of its core capabilities, components, and roles, drawing on all related documentation:

🔧 System Overview: “Strategic Decision Support GPT”

✨ Core Capabilities

| Feature | Description |
| --- | --- |
| SDAF Compliance | Follows the Strategic Decision Analysis Framework (SDAF) to ensure decisions are data-driven, stakeholder-aware, and risk-informed. |
| Multi-role Customization | Supports distinct behavior for Strategist, SME, Product Designer, Financial Analyst, and Strategic Foresight Lead. |
| Integrated Tools | Combines SWOT builder, MCDA engine, GPT-powered ideation, scenario planning, and stakeholder mapping. |
| Explainability Layer | Justifies recommendations using markdown rationales, charts, and exportable reports. |
| Scalability Path | Designed to evolve from MVP to full AI agent supporting real-time foresight and decision intelligence. |

🧠 Role-Based Behaviors

| Role | Special Functions |
| --- | --- |
| Strategist | Uses SWOT, MCDA, and scenario tools to shape actionable paths. |
| Foresight Lead | Runs scenario planning, risk mapping, trend analysis. |
| Financial Analyst | Applies ROI/NPV models, evaluates investments with risk-adjusted MCDA. |
| Product Designer | Ideates features, prioritizes with usability/cost/desirability MCDA. |
| SME | Validates logic, adds domain constraints, supports scoring and root cause tools. |
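The Financial Analyst role mentions ROI/NPV models. As a minimal sketch of the arithmetic such a role would lean on, the functions below compute net present value and simple return on investment. The cash flows and discount rates are invented for illustration, and raising the discount rate is just one simple way to stand in for risk adjustment.

```python
def npv(rate, cash_flows):
    """Net present value; cash_flows[0] happens today (t = 0)."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))

def roi(gain, cost):
    """Simple return on investment as a fraction of cost."""
    return (gain - cost) / cost

# Hypothetical project: pay 1000 now, receive 500 a year for three years.
flows = [-1000, 500, 500, 500]

print(round(npv(0.10, flows), 2))  # 243.43 at a 10% discount rate
print(round(npv(0.25, flows), 2))  # -24.0 at a riskier 25% rate
print(roi(gain=1500, cost=1000))   # 0.5
```

The same project looks attractive at 10% and unattractive at 25%, which is why the choice of discount rate deserves as much scrutiny as the cash-flow forecast.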

⚙️ Functional Modules (MVP + Advanced)

| Module | Purpose |
| --- | --- |
| SWOT Matrix Builder | Structured factor entry + GPT synthesis. |
| MCDA Engine | Weighted criteria scoring, sensitivity analysis. |
| GPT-Powered Option Generator | Suggests strategic alternatives from plain text inputs. |
| Scenario Planner (Advanced) | Builds foresight scenarios with drivers and narratives. |
| Stakeholder Mapper | AI-based clustering of stakeholders by influence/interest. |
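The MCDA Engine row boils down to a weighted sum plus a check on how fragile the winner is. Here is a minimal sketch assuming a simple weighted-sum model. The GPT's actual scoring logic is not documented in this post, and the options, criteria, and the ±20% perturbation are hypothetical.

```python
def weighted_scores(options, weights):
    """Weighted-sum score per option, with weights normalised to sum to 1."""
    total = sum(weights.values())
    return {
        name: sum(weights[c] / total * scores[c] for c in weights)
        for name, scores in options.items()
    }

def rank(options, weights):
    scores = weighted_scores(options, weights)
    return sorted(scores, key=scores.get, reverse=True)

def sensitivity_flips(options, weights, delta=0.2):
    """List (criterion, factor) pairs where scaling one weight changes the winner."""
    winner = rank(options, weights)[0]
    flips = []
    for criterion in weights:
        for factor in (1 - delta, 1 + delta):
            trial = dict(weights, **{criterion: weights[criterion] * factor})
            if rank(options, trial)[0] != winner:
                flips.append((criterion, factor))
    return flips

# Hypothetical options scored 1-10 on each criterion (higher is better).
options = {
    "Build":   {"cost": 4, "speed": 6, "risk": 7},
    "Buy":     {"cost": 6, "speed": 9, "risk": 5},
    "Partner": {"cost": 7, "speed": 5, "risk": 6},
}
weights = {"cost": 0.5, "speed": 0.3, "risk": 0.2}

print(rank(options, weights))               # ['Buy', 'Partner', 'Build']
print(sensitivity_flips(options, weights))  # []: the winner survives +/-20%
```

An empty list from `sensitivity_flips` means no single weight change of that size dethrones the top option, which is a quick proxy for how much the recommendation depends on the weighting.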

📦 Output Formats

- Visual scorecards (bar/radar charts)

- Strategy reports (markdown/PDF-ready)

- Foresight narratives (3-scenario format)

- Stakeholder impact tables and engagement strategies

🔄 Development & Deployment Strategy

| Phase | Deliverables |
| --- | --- |
| Phase 1 | SWOT, MCDA, GPT UI (MVP) |
| Phase 2 | Role workflows, scenario planner |
| Phase 3 | Full AI Agent with memory (ChromaDB/Pinecone) |
| Phase 4 | Enterprise SaaS with audit, APIs, LLM federation |

📌 Strategic Fit and Value

- Value Proposition: Augments human judgment with AI, ensuring clarity, speed, and traceability in decisions.

- ROI Drivers: Cuts consulting hours, improves decision speed by 40%, boosts institutional learning.

- Tool Stack: React (frontend), Python (backend), OpenAI (AI), PostgreSQL + Pinecone (data/memory).

Description of Custom GPT V2

The Custom GPT you’ve developed, referred to as the Basic AI-Augmented Decision Support Tool (DST) 2.0, is a sophisticated decision-support assistant built around the Strategic Decision Analysis Framework (SDAF). Here’s a structured breakdown of its core capabilities and value:

🧠 System Purpose

To assist strategists, analysts, designers, and foresight professionals in making structured, explainable, and data-driven decisions using AI-powered tools like GPT, integrated with frameworks such as SWOT, MCDA, and Scenario Planning.

👥 Role-Based Customization

The GPT adapts to different personas, tailoring interactions and outputs:

| Role | Key Capabilities Enabled |
| --- | --- |
| Strategic Foresight Lead | Scenario planning, risk mapping, trend analysis using GPT |
| Financial Analyst | ROI, NPV, MCDA, KPI evaluation |
| Product Designer | Feature ideation, desirability MCDA, user-centric analysis |
| SME (Subject Matter Expert) | Data validation, scoring logic, constraint modeling |
| Strategist | MCDA, stakeholder analysis, roadmap formulation |

🛠️ Core Tools and Features

| Tool | Description |
| --- | --- |
| SWOT Matrix Builder | User input + GPT synthesis for strategy framing |
| MCDA Engine | Criteria weighting, scoring matrix, sensitivity analysis |
| Option Generator | AI-generated strategic alternatives with rationales |
| Scenario Planner | Generates optimistic/pessimistic/transformative futures |
| Stakeholder Mapper | LLM-assisted mapping based on power/interest |
| Explainability Layer | Outputs markdown summaries, visual charts, and documentation |
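The Stakeholder Mapper is described as mapping on power and interest. One common way to do that, assumed here rather than confirmed by the GPT's documentation, is the classic four-quadrant power/interest grid. The 0 to 1 scale, the 0.5 threshold, and the stakeholder names are hypothetical.

```python
def quadrant(power, interest, threshold=0.5):
    """Place a stakeholder on a power/interest grid (scores from 0 to 1)."""
    high_power = power >= threshold
    high_interest = interest >= threshold
    if high_power and high_interest:
        return "manage closely"
    if high_power:
        return "keep satisfied"
    if high_interest:
        return "keep informed"
    return "monitor"

# Hypothetical stakeholders as (power, interest) pairs.
stakeholders = {
    "Regulator": (0.9, 0.3),
    "Product team": (0.4, 0.9),
    "Board": (0.9, 0.8),
    "General public": (0.2, 0.2),
}

for name, (power, interest) in stakeholders.items():
    print(f"{name}: {quadrant(power, interest)}")
```

An LLM could plausibly propose the raw power and interest scores from a free-text description, with a deterministic rule like this one keeping the final placement explainable.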

📈 Enhancements from V1 to V2 (per critique document)

- Advanced Decision Science Integration: AHP, Monte Carlo, Pareto Analysis

- Dynamic Weighting & Sensitivity Analysis

- Real-Time Data Integration (e.g., economic indicators, compliance risk scores)

- Bias Mitigation Features

- Visual Dashboards: Radar charts, risk matrices, scenario trees

- Scenario Comparison Mode: Side-by-side strategic path evaluations
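Of the enhancements listed, Monte Carlo analysis is the easiest to show in a few lines. The sketch below treats each criterion score as uncertain, draws it from a triangular distribution, and counts how often each option comes out on top. The options, the (low, most likely, high) ranges, and the weights are invented for illustration, and this is not a description of how DST 2.0 implements it.

```python
import random

def simulate_wins(options, weights, n_trials=2000, seed=42):
    """Share of trials each option wins when its scores are uncertain."""
    rng = random.Random(seed)  # seeded so the sketch is repeatable
    wins = {name: 0 for name in options}
    for _ in range(n_trials):
        totals = {
            name: sum(
                # random.triangular takes (low, high, mode) in that order.
                weights[c] * rng.triangular(low, high, mode)
                for c, (low, mode, high) in ranges.items()
            )
            for name, ranges in options.items()
        }
        wins[max(totals, key=totals.get)] += 1
    return {name: count / n_trials for name, count in wins.items()}

# Hypothetical (low, most likely, high) scores per criterion.
options = {
    "Expand": {"cost": (3, 5, 7), "impact": (6, 8, 9)},
    "Hold":   {"cost": (6, 7, 8), "impact": (2, 3, 5)},
}
weights = {"cost": 0.4, "impact": 0.6}

shares = simulate_wins(options, weights)
print(shares)
```

Reporting a win share instead of a single score makes the uncertainty visible: an option that wins 55% of trials is a much weaker recommendation than one that wins 99%.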

📋 Prompt Templates

- SWOT Analysis: “As a [Role], exploring [Topic]. Help me create a SWOT matrix.”

- MCDA Evaluation: “Compare [Options] using [Criteria]. Generate weighted scores.”

- Scenario Planning: “Explore futures of [Sector]. Generate 3 scenario narratives.”

- Stakeholder Analysis: “Map stakeholders for [Decision]. Recommend engagement strategies.”
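The four templates above are easy to turn into a small helper that fills the bracketed slots and complains when one is missing. This is a minimal sketch. The template text comes from the list above, while the dictionary keys, field names, and `build_prompt` itself are hypothetical.

```python
PROMPT_TEMPLATES = {
    "swot": "As a {role}, exploring {topic}. Help me create a SWOT matrix.",
    "mcda": "Compare {options} using {criteria}. Generate weighted scores.",
    "scenario": "Explore futures of {sector}. Generate 3 scenario narratives.",
    "stakeholder": "Map stakeholders for {decision}. Recommend engagement strategies.",
}

def build_prompt(kind, **fields):
    """Fill a template, failing loudly if a required slot is missing."""
    template = PROMPT_TEMPLATES[kind]
    try:
        return template.format(**fields)
    except KeyError as missing:
        raise ValueError(f"missing field for '{kind}' prompt: {missing}") from None

print(build_prompt("swot", role="Strategist", topic="AI governance"))
print(build_prompt("mcda", options="Build, Buy, Partner", criteria="cost, speed, risk"))
```

Failing on a missing slot is deliberate: a prompt that silently ships with an unfilled placeholder tends to produce confident answers to the wrong question.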

🔐 Ethical Safeguards & Compliance

- GDPR-aligned, explainable outputs

- Prompts for cognitive bias awareness

- Clear disclaimers for AI-generated suggestions

🚀 Launch Phases

| Phase | Focus |
| --- | --- |
| MVP | SWOT, MCDA, GPT ideation |
| Phase 2 | Role workflows, scenario planner |
| Phase 3 | Agent memory, decision dashboard |
| Phase 4 | Full LLM-powered foresight and data integration suite |