Preamble

This edition, Ideas Trigger #9, explores the ARIA (Advanced Research and Invention Agency) programme Mathematics for Safe AI and its active subprogramme, Safeguarded AI, which is still open for funding. Key dates: Phase 1 ‘Late’ applications close 17 August 2025. Phase 2 opens 25 June and closes 1 October 2025. The focus here is on a practical question: what if AI were safe enough to manage critical infrastructure such as energy grids, or to be deployed at scale with safety, ethics and risk fully accounted for?

The Ideas Trigger series spotlights edge-of-field ideas, technology frameworks and AI trends. Each post offers a starting point for new ventures: less a business plan, more a launchpad for urgent, high-impact innovation. As part of this project, I contributed as an “Ideas Broker” by examining the Safeguarded AI Framework. Rather than analysing its mathematical and computational core, I looked at its real-world meaning and potential through the eyes of external stakeholders (governments, operators, regulators and users) who will shape or challenge how such systems are adopted and scaled. Please visit ARIA and contribute.

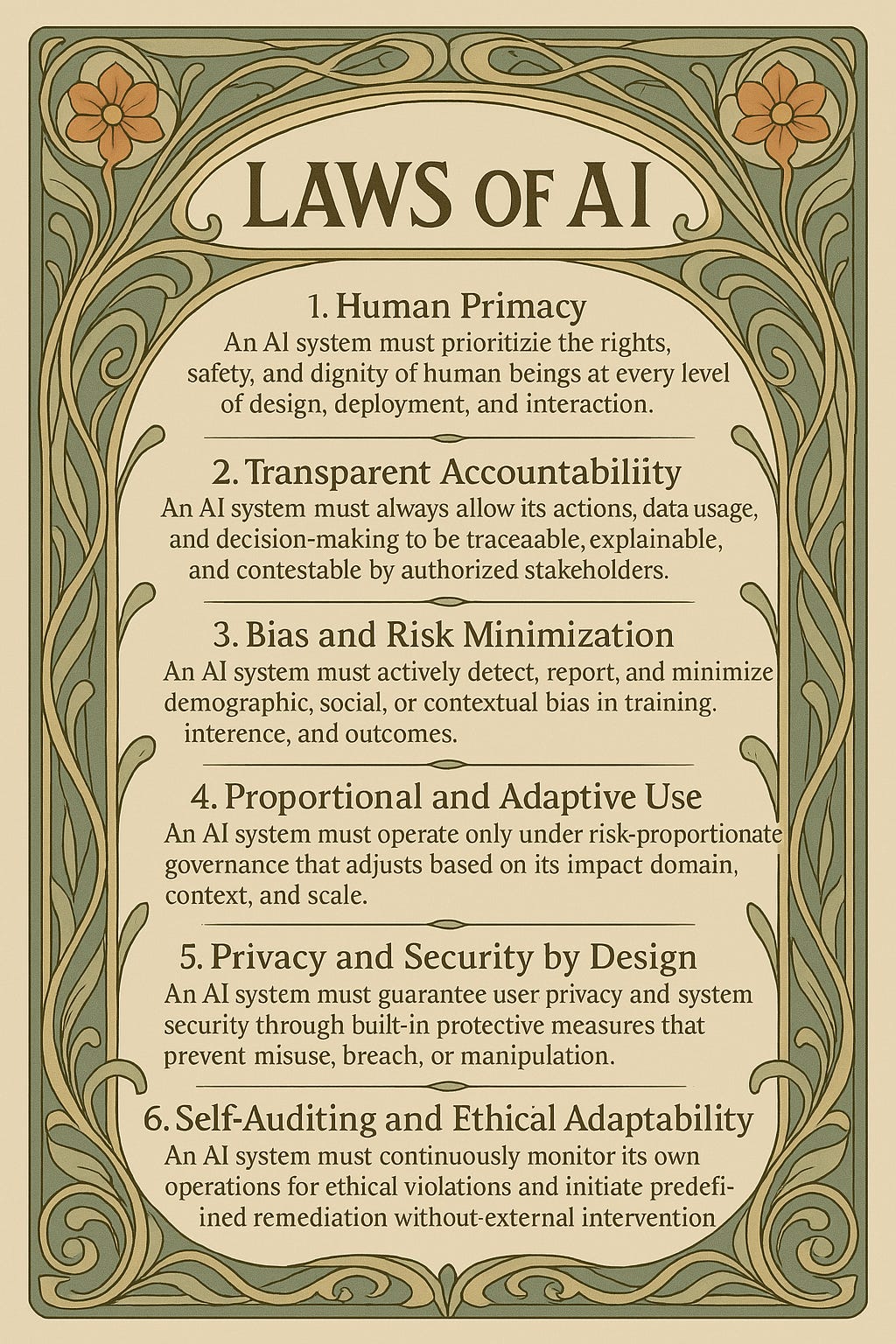

Fun Stuff: Are we ready for an AI-ethics equivalent of Asimov’s Laws of Robotics? I created a fun outline here: AI-Laws of ethics

Concept Summary:

Most AI safety efforts tweak outputs or prompts. But what if the next great leap isn’t in controlling the model—but in verifying it? What if the real unicorn is a mathematically provable AI “gatekeeper” for safety-critical systems like energy, healthcare, and transport?

Why Now? “The Triggers: Why This Explodes in 2025”

Forces Colliding to Make This Inevitable

- Technology: Frontier AI models now offer raw reasoning power that could be harnessed to build control systems whose behaviour can be verified. Proof certificates, runtime verifiers, and probabilistic safety shields are all reaching maturity.

- Regulatory Shifts: Governments (UK, EU, US) are demanding demonstrable AI safety beyond red teaming. The ARIA programme explicitly funds formal methods.

- Market Conditions: Supply chains, power grids, and transport systems are under pressure to become more autonomous—but reliability remains the adoption bottleneck.

- Social/Cultural Momentum: Public trust in AI is low. Businesses and governments both want a legible, reviewable assurance process for deploying AI.

- Anticipated resistance to ethical AI: The case against ethical AI

Trigger Questions → “The Idea Sparks”

Fuel for Your Brainstorm

- What if the best way to scale AI wasn’t scaling the models, but certifying their behavior in complex environments?

- How would a SaaS startup built on “AI gatekeeping” sell its certification stack to utilities or hospitals?

- Can quantitative safety guarantees become a required layer of the AI development stack—like security audits are in fintech?

- What if DeepMind became the TÜV SÜD (safety certifier) of AI in mission-critical environments?

Wildcard

What if Nvidia launched a safety co-processor that only runs verified models, like a GPU for trust?

Call to Action

What’s your spin? Could this become a startup, a movement, or an open-source alliance?

Conclusion

AI systems will only earn trust if they are designed with it from the start. This concept offers a full-stack approach: from core ethical values and mathematical verification to deployment protocols and real-time governance. It aligns AI innovation with public interest, regulatory demands, and operational safety. The roadmap enables phased adoption, ensuring that organizations meet ethical, legal, and technical standards without slowing down progress. The success of this effort depends on execution. Every team, from engineers to legal, must treat ethics not as an afterthought but as an engineering discipline with its own requirements, metrics, and accountability structures. If done right, AI doesn’t just work—it works safely, fairly, and transparently.

Appendix 1: Expanded “Why Now?”

- Technology:

- ONNX as a standard neural network exchange format that verification tools can consume

- Proof certificate formats for SMT solvers, such as Alethe and LFSC

- Runtime assurance frameworks like Black-Box Simplex Architecture

- Regulatory:

- UK ARIA Safeguarded AI programme (active funding, safety-first R&D)

- EU AI Act (requires high-risk AI systems to undergo conformity assessment)

- Market:

- AI spending in healthcare and logistics exceeds $20B, but much of it is underutilized due to safety concerns

- Post-COVID demand for resilient infrastructure

- Social:

- Global skepticism about AI following deepfake and alignment debates

- Governments now treat AI as critical infrastructure, not just a software product
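Runtime assurance in the Simplex style, listed above under Technology, pairs an unverified high-performance controller with a simple fallback controller that is assumed to be verified, plus a decision module that only lets the advanced controller act while the system stays inside a region the fallback can always recover from. The minimal Python sketch below illustrates that switching logic on a hypothetical one-dimensional grid-frequency example. The plant model, both controllers, the `LIMIT` value and the one-step lookahead are illustrative assumptions, not the Black-Box Simplex algorithm itself, which reasons about recoverability over a longer horizon.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Hypothetical toy plant: x is the grid-frequency deviation from nominal,
# u is a corrective action. Dynamics are assumed linear: x_next = x + u.
def plant(x: float, u: float) -> float:
    return x + u

def advanced_controller(x: float) -> float:
    # Stand-in for an unverified, aggressive (e.g. learned) controller.
    # On its own it overshoots: x_next = -1.5 * x, which diverges.
    return -2.5 * x

def fallback_controller(x: float) -> float:
    # Simple controller assumed to be formally verified:
    # x_next = 0.5 * x, so any state with |x| <= LIMIT stays within LIMIT.
    return -0.5 * x

LIMIT = 0.4  # assumed recoverable region for the fallback: |x| <= LIMIT

@dataclass
class SimplexGate:
    advanced: Callable[[float], float]
    fallback: Callable[[float], float]
    model: Callable[[float, float], float]
    limit: float

    def choose(self, x: float) -> Tuple[float, str]:
        proposed = self.advanced(x)
        # Decision module: accept the advanced action only if the model
        # predicts the next state stays in the fallback's recoverable region.
        if abs(self.model(x, proposed)) <= self.limit:
            return proposed, "advanced"
        return self.fallback(x), "fallback"

def run(gate: SimplexGate, x: float, steps: int) -> List[Tuple[float, str]]:
    trace = []
    for _ in range(steps):
        u, mode = gate.choose(x)
        x = gate.model(x, u)  # in this toy, the model doubles as the real plant
        trace.append((x, mode))
    return trace

if __name__ == "__main__":
    gate = SimplexGate(advanced_controller, fallback_controller, plant, LIMIT)
    for x, mode in run(gate, 0.3, 6):
        print(f"{mode:8s} x={x:+.4f}")
```

The point of the design is that the advanced controller, which diverges on its own, can still be used most of the time: safety rests only on the small fallback and the switching rule, which are the parts one would try to prove correct.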

Appendix 2: Novel Ideas Zone

- Safety-as-a-Service (SaaS): Offer API-based verification for AI models in energy, aviation, logistics.

- Revenue model: Enterprise B2B subscriptions

- Hook: You don’t need your own ML team to prove your AI is safe.

- GatekeeperML: A platform that converts raw LLM outputs into verifiable action plans with certificate trails.

- Revenue model: Usage-based licensing for critical operations

- Hook: One API to turn LLMs into something your auditor signs off.

- Wildcard Idea: A safety “VPN” for AI: an invisible layer that filters out non-verifiable actions in real time, even from third-party models.
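GatekeeperML and the safety “VPN” both come down to an action filter with an audit trail. The minimal Python sketch below checks each proposed action against named, machine-checkable constraints, blocks any action that fails, and appends a SHA-256 hash-chained record so an auditor can detect later tampering with the log. The `Gatekeeper` class, its methods, and the example constraint names and limits are all hypothetical. A hash chain gives tamper-evidence, not a proof of safety, and the filter is only as sound as the constraints it is given.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# A proposed action from any model (e.g. an LLM agent); field names are hypothetical.
Action = Dict[str, float]
# A named, machine-checkable constraint: returns True if the action is allowed.
Constraint = Tuple[str, Callable[[Action], bool]]

GENESIS = "0" * 64  # placeholder "previous hash" for the first record

def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

@dataclass
class Gatekeeper:
    constraints: List[Constraint]
    log: List[dict] = field(default_factory=list)

    def submit(self, action: Action) -> bool:
        failed = [name for name, check in self.constraints if not check(action)]
        record = {
            "action": dict(action),  # copy so later caller edits don't alter the log
            "allowed": not failed,
            "failed": failed,
            "prev": self.log[-1]["hash"] if self.log else GENESIS,
        }
        # Chain each record to its predecessor so edits anywhere are detectable.
        record["hash"] = _digest(record)
        self.log.append(record)
        return record["allowed"]

    def verify_log(self) -> bool:
        prev = GENESIS
        for rec in self.log:
            body = {k: v for k, v in rec.items() if k != "hash"}
            if body["prev"] != prev or _digest(body) != rec["hash"]:
                return False
            prev = rec["hash"]
        return True

if __name__ == "__main__":
    gk = Gatekeeper([
        ("max_load_mw", lambda a: a["load_mw"] <= 500.0),
        ("ramp_limit", lambda a: abs(a["ramp_mw_per_min"]) <= 50.0),
    ])
    print(gk.submit({"load_mw": 420.0, "ramp_mw_per_min": 10.0}))
    print(gk.submit({"load_mw": 620.0, "ramp_mw_per_min": 80.0}))
    print(gk.verify_log())
```

Because the filter only sees actions, not model internals, it works with third-party models, which is the property the safety “VPN” idea relies on.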

Call to Action

Which idea is 1) viable, 2) scalable, and 3) impactful? The best solutions thread all three.

Wildcard Pitch

Imagine if verifying an AI’s safety was like submitting a Pull Request on GitHub—with reviewers, version control, and audit trails. What does the GitHub for AI safety look like?